Powering data centers is a challenge for utilities.

Data centers are highly valued by utilities because they consume large amounts of electricity with consistent, predictable demand patterns that remain steady throughout both the day and the year.

The explosive growth in power demand, driven largely by Artificial Intelligence (AI) and cloud computing, has overwhelmed traditional electrical grid planning and construction timelines.

Introduction

New hyperscale data centers often require 100 MW to 500 MW of power, roughly the demand of a small to medium-sized city. Utilities are happy to accept new business, but data center developers want this power now, and utilities are not prepared to respond so quickly. Expanding transmission and substation capacity can take utilities 5 to 10 years because of lengthy planning, permitting, environmental review, and construction processes. Data center developers, especially those focused on the AI race, prioritize “time to power” above almost everything else, because delays mean lost competitive advantage and revenue. Developers are willing to pay a premium for faster power access and have taken new and unconventional approaches to powering data centers.
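To put 100 MW to 500 MW in household terms, the short Python sketch below converts a constant load into annual energy and divides it by an average home's yearly use. The 10,500 kWh per home figure and the full (100%) load factor are assumptions chosen for illustration, not numbers from this article.

```python
HOURS_PER_YEAR = 8760


def annual_energy_mwh(load_mw: float, load_factor: float = 1.0) -> float:
    """Energy drawn in one year by a load running at `load_factor` of its rated MW."""
    return load_mw * load_factor * HOURS_PER_YEAR


def household_equivalent(load_mw: float, load_factor: float = 1.0,
                         kwh_per_home: float = 10_500) -> float:
    """Number of average homes that would use the same annual energy.

    kwh_per_home is an assumed average annual household use, not a sourced figure.
    """
    return annual_energy_mwh(load_mw, load_factor) * 1000 / kwh_per_home


for mw in (100, 500):
    print(f"{mw} MW is about {household_equivalent(mw):,.0f} average homes")
```

Under those assumptions, 100 MW to 500 MW matches the annual use of roughly 83,000 to 417,000 average homes, which is consistent with the small-to-medium city comparison. A lower load factor would shrink both numbers proportionally.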

The need for gigawatts of power on tight deadlines has forced data center developers to become major energy developers. They are doing this in three main ways:

- Funding Renewables via PPAs: Hyperscalers like Amazon, Microsoft, and Google are the world’s largest corporate buyers of clean energy. Their long-term Power Purchase Agreements (PPAs) provide the financial certainty needed for developers to build hundreds of new utility-scale wind and solar farms.

- On-Site, Grid-Independent Power: To bypass multi-year grid connection queues, developers are building their own on-site power. They have purchased natural gas turbines and fuel cells, sometimes co-located with renewable power, and operate them independently of the local utility.

- Direct Connections to Power Plants: Data center campuses are now being planned and built adjacent to existing power plants. Several major data center developers, including Microsoft, Google, Meta, and Amazon Web Services, have signed PPAs for existing nuclear power, such as Microsoft's 20-year PPA to enable the restart of the shuttered Three Mile Island reactor in Pennsylvania. There is also growing interest in, and research into, PPAs for new small modular reactors (SMRs), advanced reactors, and full-scale nuclear plants.

Example of the new paradigm

The massive xAI “Colossus” data center project in Memphis, Tennessee, showcases a new paradigm for building AI infrastructure at incredible speed. To meet the Colossus data center's massive power demands quickly, xAI used portable, or mobile, natural gas-powered turbines of the kind typically used for disaster recovery or fast, temporary power generation. The turbines drew legal challenges from environmental groups over air quality permits and were eventually removed.

Initial reports mentioned around 18-20 turbines, but later aerial images suggested as many as 35 turbines were installed and operating, with a combined capacity estimated at over 70 MW, though the total demand for Phase I was 150 MW. The TVA (Tennessee Valley Authority) Board of Directors officially approved the plan to supply a total of 150 MW of power to the xAI facility in November 2024.

The connection to the full 150 MW load required the construction of a new electric substation near the data center, which was paid for by xAI. By May 2025, the massive Colossus supercomputer facility was connected to the new substation, providing it with 150 MW of power from the MLGW/TVA grid.

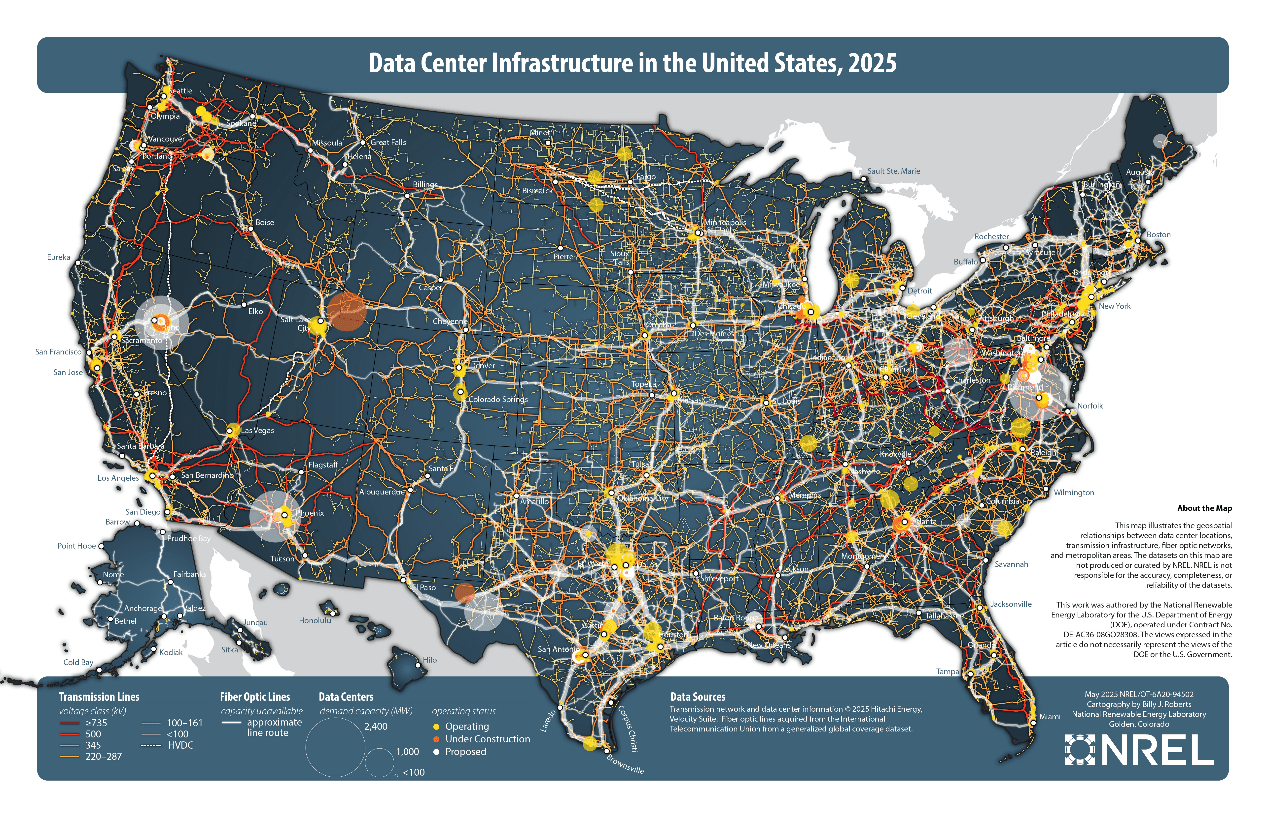

The map shows where new data centers are being built.


Data centers planned in the US

While many data center plans are kept confidential, current expansion announcements focus on regions like:

- Northern Virginia (Ashburn/Loudoun & Prince William Counties): The largest existing and planned capacity of any market in the world.

- Phoenix, Arizona (Maricopa County): A major emerging market with high growth projected.

- Dallas-Fort Worth (DFW), Texas: Significant planned growth.

- Atlanta, Georgia (I-85 Corridor): High percentage growth projected, with major new investments.

- Salt Lake City, Utah: A fast-growing secondary market.

Impact on utilities and power costs

Competition to build and power data centers is fiercer than anything the utility industry has seen before. At the same time, there is significant new demand growth from electric transportation (mostly electric passenger cars) and, to a lesser extent, from the electrification of HVAC and industry. This increased demand requires new utility investment in transmission, substations, and distribution.

The generation side is split between vertically integrated regulated utilities and Independent Power Producers (IPPs). IPPs have generally dominated the buildout of new capacity (especially renewables and battery storage), particularly in deregulated markets, because they can respond to market price signals and secure private long-term contracts (PPAs) faster than utilities navigating regulatory approval cycles.

Utilities remain the primary developers in the regulated markets and are also heavily investing in transmission and distribution infrastructure across all markets to physically connect the new generation built by both themselves and IPPs.

As data centers buy and build power, demand is outpacing supply, and that imbalance is driving up the cost of power. A small utility or municipal power company without its own generation buys power from IPPs or other utilities, and it is now competing with data centers for that supply.

Utilities see data centers as great customers. They buy large amounts of power with steady daily and seasonal loads, which match up well with base load generators like nuclear or coal. Because their load is steady, they do not require transformers or wires oversized relative to the energy they use, as a large Level 3 EV charging facility with short, high peaks would.

Of course, data center developers are concerned about power costs, and new data centers have many ways to become better customers and earn better power rates from utilities. About 40% of data center power goes to HVAC, and thermal batteries can shift that HVAC load away from costly peak hours, typically 5 to 9 p.m. Data centers are also moving toward large grid-scale batteries in place of traditional UPS batteries; such batteries can provide useful grid services to utilities as well as backup power to the data center.

A town or utility that adds data centers to its grid gains revenue from the power sold. More revenue helps cover the large overhead costs utilities carry for wires, poles, trucks, staff, and buildings, which can reduce the overall cost of power in those towns or utility service areas.
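Two of the claims above lend themselves to back-of-envelope arithmetic: how much thermal storage it takes to move the HVAC share of the load out of the 5 to 9 p.m. peak, and how spreading fixed costs over more kWh lowers overhead per kWh. The Python sketch below uses a hypothetical 100 MW facility, a hypothetical utility with $50 million per year of fixed costs and 1,000 GWh of sales, and an assumed 90% data center load factor. It ignores storage losses and assumes the utility's fixed costs do not grow when the data center connects.

```python
HOURS_PER_YEAR = 8760


def thermal_storage_mwh(facility_mw: float, hvac_share: float = 0.40,
                        peak_hours: float = 4.0) -> float:
    """Electric-equivalent cooling energy to store so HVAC draws no grid power
    during the peak window. Assumes a flat HVAC load and ignores storage losses
    and differences in chiller efficiency."""
    return facility_mw * hvac_share * peak_hours


def fixed_cost_per_kwh(fixed_costs_usd: float, annual_sales_kwh: float) -> float:
    """Overhead recovered per kWh when fixed costs are spread over all sales."""
    return fixed_costs_usd / annual_sales_kwh


# Hypothetical 100 MW data center; the 5-9 p.m. peak window is 4 hours.
print(f"Thermal storage needed: {thermal_storage_mwh(100):.0f} MWh")

# Hypothetical utility: $50M/yr of fixed costs over 1,000 GWh of annual sales.
fixed_costs = 50e6
base_sales_kwh = 1e9
dc_sales_kwh = 100 * 0.9 * HOURS_PER_YEAR * 1000  # 100 MW at an assumed 90% load factor
before = fixed_cost_per_kwh(fixed_costs, base_sales_kwh)
after = fixed_cost_per_kwh(fixed_costs, base_sales_kwh + dc_sales_kwh)
print(f"Overhead per kWh: {before * 100:.2f} cents -> {after * 100:.2f} cents")
```

In this example, the facility would need about 160 MWh of stored cooling, and overhead falls from 5.00 to about 2.80 cents per kWh. That drop holds only if fixed costs stay flat; new substations or lines built for the data center would add to them.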

The Leading AI Model Developers

1. OpenAI (in partnership with Microsoft). Flagship Products: The GPT series and ChatGPT. Microsoft is its primary investor and key cloud partner and integrates OpenAI's models deeply into its own products, such as the Azure cloud platform and Microsoft Copilot.

2. Google (specifically Google DeepMind). Flagship Product: The Gemini family of models (including Gemini Pro, Ultra, and future versions).

3. Meta (formerly Facebook). Flagship Product: The Llama series of models (e.g., Llama 3).

4. Anthropic. Flagship Product: The Claude family of models (e.g., Claude 3, Claude 3.5 Sonnet). Anthropic is a major competitor to both OpenAI and Google and is heavily backed by Amazon and Google.

5. xAI. Flagship Product: Grok. Founded by Elon Musk, xAI aims to create an AI to “understand the true nature of the universe.”

6. DeepSeek AI. Flagship Product: The DeepSeek model family (e.g., DeepSeek-V2). They are a leading Chinese AI research lab that has released a series of extremely powerful open-source models that are highly regarded, particularly for their exceptional coding and mathematical reasoning capabilities.

Is there an investment bubble like the dot-com bubble?

The short answer is yes. The massive overspending by companies like Meta will shift from being first at all costs to a more rational return-on-investment criterion. However, the AI race is unlikely to come to a halt. Current spending projections are:

2025: ~$400 billion. Spending in 2025 is dominated by massive capital investment in the physical infrastructure for AI. Data center construction and the procurement of tens of billions of dollars' worth of NVIDIA GPUs and other AI accelerators represent the largest share of this cost.

2026: ~$550 billion. The rapid year-over-year growth is driven by the ongoing AI arms race. As new, more powerful AI models are released, demand for even larger data centers and next-generation GPUs continues to accelerate. Spending on the electrical infrastructure to power these facilities becomes a major and growing line item.

2030: Over $1.5 trillion. The leap to a trillion-dollar-plus run rate by 2030 is based on widespread enterprise adoption of AI. By then, spending will shift from being concentrated among a few hyperscalers to being broadly distributed, as thousands of companies build their own smaller AI systems and pay for massive amounts of AI-powered cloud services.

Electric Power: This is the fastest-growing operational cost. Powering the millions of GPUs in these data centers is projected to become a multi-hundred-billion-dollar annual expense by the end of the decade, making energy the primary long-term bottleneck for AI growth.
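The spending projections above are easier to compare as compound annual growth rates (CAGR). This minimal Python sketch computes them from the figures in the text, treating "over $1.5 trillion" as exactly $1,500 billion, so the rates involving 2030 are lower bounds.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


# Projected AI spending in $ billions, as given in the text (2030 taken as 1,500).
projections = {2025: 400, 2026: 550, 2030: 1500}

for y0, y1 in [(2025, 2026), (2026, 2030), (2025, 2030)]:
    rate = cagr(projections[y0], projections[y1], y1 - y0)
    print(f"{y0} to {y1}: {rate:.1%} per year")
```

Under that reading, spending grows 37.5% from 2025 to 2026, then slows to roughly 28.5% per year from 2026 to 2030 (about 30% per year across the whole 2025 to 2030 span).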

This is a race to develop the best AI applications, which will provide your news, your library, your entertainment, your education, and maybe even your companionship. The AI investment race is showing early signs of market saturation and risk, but it is unlikely to subside completely because of fundamental differences from the dot-com bubble. Instead, most analysts predict a shift toward consolidation, disciplined spending, and a focus on profitability. The shakeout could leave a small group of winners, but money for better AI models and new applications will keep flowing. This “AI Oligopoly” may be the current hyperscalers: Microsoft/OpenAI, Google, Amazon (with Anthropic), and Meta. The prize is not primarily scientific or industrial AI. It is about owning influence, i.e., being the source of truth and knowledge, carrying the advertising, guiding your purchases, owning your news and your screen time, and being your trusted teacher, partner, and friend. Having the best frontier AI model and user interface is the key to success.


The unprecedented demand in the US for lightning-fast power connections to new data centers does not fit the traditional ways utilities provide power to new customers. As a result, a range of new and creative approaches has emerged. Developers are building their own power generation and microgrids; data centers are becoming power companies themselves. They are building large battery energy storage systems (BESS) that not only provide UPS backup power but also provide grid services to utilities. Utilities and data center developers are also collaborating on new power generation, new or upgraded substations, and the power lines needed to meet data centers' power and reliability requirements.
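One way to picture a battery that serves both as UPS backup and as a grid resource is to reserve enough energy to carry the critical load for a chosen ride-through time and offer only the remainder to the utility. The Python sketch below models that split. The class name, the 400 MWh capacity, the 100 MW critical load, and the 1-hour ride-through are hypothetical, and real BESS controls also manage power limits, degradation, and market rules that this ignores.

```python
from dataclasses import dataclass


@dataclass
class DualUseBattery:
    """Hypothetical BESS shared between UPS backup and grid services."""
    energy_mwh: float        # usable energy capacity
    critical_load_mw: float  # load the battery must carry during an outage
    ride_through_h: float    # backup duration the operator wants to guarantee

    @property
    def backup_reserve_mwh(self) -> float:
        """Energy that must always stay in the battery for backup."""
        return self.critical_load_mw * self.ride_through_h

    @property
    def grid_service_mwh(self) -> float:
        """Energy that can be offered to the utility without touching the reserve."""
        return max(0.0, self.energy_mwh - self.backup_reserve_mwh)

    def can_discharge(self, state_of_charge_mwh: float, request_mwh: float) -> bool:
        """Accept a grid-service dispatch only if the backup reserve stays intact."""
        return state_of_charge_mwh - request_mwh >= self.backup_reserve_mwh


battery = DualUseBattery(energy_mwh=400, critical_load_mw=100, ride_through_h=1)
print(f"Reserved for backup: {battery.backup_reserve_mwh} MWh")
print(f"Available for grid services: {battery.grid_service_mwh} MWh")
print("Dispatch 250 MWh from full:", battery.can_discharge(400, 250))
print("Dispatch 100 MWh from 150 MWh:", battery.can_discharge(150, 100))
```

The design choice here is a hard floor: grid dispatches are refused whenever they would dip into the backup reserve, so backup duty always takes priority over revenue from grid services.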

Data centers are prized customers for utilities. They consume large amounts of steady power around the clock and throughout the seasons, and they often have far more flexibility to provide ancillary services to the utility than typical residential, commercial, or industrial customers. Although they are schedule driven, they are less sensitive to the short-term price of power, because the AI race rewards securing power faster than competitors in order to release better AI models sooner and lock in a customer base with superior AI applications.

Hyperscalers have signed shorter-term PPAs for fossil power and long-term PPAs for massive quantities of renewable power, and they have memorandums of understanding for future nuclear power that may come from new SMRs and advanced reactors. While data center loads match up well with base load generation like nuclear or coal, they are often powered by intermittent generation like solar and wind paired with battery storage.

Data center developers seek out locations that can provide power quickly, have the necessary water and land resources, and have favorable local zoning and community support. They also prefer sites where it will be easy to expand in the future.

EV batteries are trending toward faster charging rates. Large high-voltage DC fast-charging stations can require massive amounts of power to charge dozens of cars simultaneously, and utilities need a strong grid to serve this growing load. Most EV charging occurs at home, and distribution utilities are adapting to these new loads with larger transformers and related low- and medium-voltage distribution infrastructure. Loads from HVAC and industrial electrification are expected to increase steadily over the next decade and beyond.

AI developers need more than just electric power to win the AI race. They need accurate, diverse, and carefully curated training data, the most appropriate model architecture, and techniques like Active Learning (to find the most useful data to train on) and Data Distillation (to reduce the size of the dataset without losing quality). They start with petabytes of data from public, private, and internally generated sources. This massive raw data pool is labeled, filtered, cleaned, and tokenized (broken down into the pieces the model understands), and these steps dramatically reduce the size of the data the AI is finally trained on. Data centers also need secure, reliable, and fast data connectivity.
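The data preparation steps described above can be illustrated with a toy Python pipeline that cleans raw text, filters out short fragments, removes exact duplicates, and tokenizes what remains. It is a simplified sketch: the regex-based cleaner and word-level tokenizer are stand-ins (production models use learned subword tokenizers such as byte-pair encoding), the sample documents are made up, and it does not attempt labeling, Active Learning, or Data Distillation.

```python
import re


def clean(doc: str) -> str:
    """Strip simple HTML tags and normalize whitespace (a stand-in for real cleaning)."""
    doc = re.sub(r"<[^>]+>", " ", doc)
    return " ".join(doc.split())


def keep(doc: str, min_words: int = 5) -> bool:
    """Toy quality filter: drop very short fragments."""
    return len(doc.split()) >= min_words


def tokenize(doc: str) -> list[str]:
    """Toy tokenizer: lowercase words and punctuation marks as separate tokens."""
    return re.findall(r"\w+|[^\w\s]", doc.lower())


def pipeline(raw_docs: list[str]) -> list[list[str]]:
    seen: set[str] = set()
    out = []
    for doc in raw_docs:
        text = clean(doc)
        if not keep(text) or text in seen:  # filter, then exact-duplicate removal
            continue
        seen.add(text)
        out.append(tokenize(text))
    return out


raw = [
    "<p>Data centers need steady, reliable power.</p>",
    "Data centers   need steady, reliable power.",  # duplicate after cleaning
    "Click here",                                    # too short, filtered out
    "Utilities plan transmission years in advance.",
]
tokens = pipeline(raw)
print(len(raw), "raw docs ->", len(tokens), "kept")
print(tokens[0])
```

Even in this tiny example, half of the raw documents are discarded before tokenization, which mirrors the text's point that curation shrinks the final training set.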

The US is behind in securing new power. China already has a grid that is larger than the US and European grids combined, and although exports of advanced NVIDIA GPUs to China are restricted, China is in a far better position than the US to supply power to AI data centers. The table below shows estimated grid power additions through 2030; China is outpacing the US in nearly every category, with geothermal the only one where the estimates are level.

| Grid energy source | Global additions in 2024 (GW) | US additions 2025 to 2030, five years (GW) | China additions 2025 to 2030, five years (GW) | Global additions 2025 to 2030, five years (GW) |
|---|---|---|---|---|
| Solar | 452 | 220 to 270 | 1,200 to 1,500 | 3,000 to 4,000 |
| Wind | 113 | 60 to 75 | 400 to 500 | 600 to 700 |
| Coal | 44.1 | -50 to -70 | 120 to 180 | 160 to 240 |
| Gas and Oil | 25.5 | 25 to 35 | 70 to 100 | 190 to 260 |
| Hydro | 24.6 | 2 to 4 | 60 to 80 | 125 to 175 |
| Nuclear | 6.8 | ~2 (uprating only) | 30 to 40 | 50 to 70 |
| Biofuel | 4.6 | 1 to 2 | 8 to 10 | 30 to 40 |
| Geothermal | 0.4 | 2 to 3 | 2 to 3 | 10 to 15 |
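To compare the US and China columns directly, the Python sketch below takes the midpoint of each range in the table and totals them. Using midpoints and treating "~2 GW (uprating only)" as exactly 2 GW are simplifying assumptions.

```python
# Ranges from the table above, in GW for 2025 to 2030:
# (US low, US high, China low, China high). US coal is a net reduction.
ADDITIONS = {
    "Solar": (220, 270, 1200, 1500),
    "Wind": (60, 75, 400, 500),
    "Coal": (-70, -50, 120, 180),
    "Gas and Oil": (25, 35, 70, 100),
    "Hydro": (2, 4, 60, 80),
    "Nuclear": (2, 2, 30, 40),
    "Biofuel": (1, 2, 8, 10),
    "Geothermal": (2, 3, 2, 3),
}


def midpoint(lo: float, hi: float) -> float:
    return (lo + hi) / 2


for source, (us_lo, us_hi, cn_lo, cn_hi) in ADDITIONS.items():
    us, cn = midpoint(us_lo, us_hi), midpoint(cn_lo, cn_hi)
    print(f"{source:<12} US {us:>7.1f}  China {cn:>7.1f}  gap {cn - us:>7.1f} GW")

us_total = sum(midpoint(a, b) for a, b, _, _ in ADDITIONS.values())
cn_total = sum(midpoint(c, d) for _, _, c, d in ADDITIONS.values())
print(f"Total        US {us_total:>7.1f}  China {cn_total:>7.1f}")
```

With these midpoints, the US adds about 291.5 GW against China's 2,151.5 GW, more than seven times as much. These are capacity figures, not energy delivered; solar and wind run at lower capacity factors than coal, gas, or nuclear.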

Recent US policies are discouraging solar, wind, and battery storage, which slows deployment of the cheapest, cleanest, and fastest-to-deploy sources of new power. US policy supports more gas and nuclear power, but new gas plants face supply chain constraints, such as limited availability of gas turbines, so these sources are not keeping up with the demands of data center developers. This constrained power supply threatens to inflate electricity prices for consumers and businesses and risks leaving the nation unable to meet the surging power demands of data centers and broader electrification cleanly and affordably.