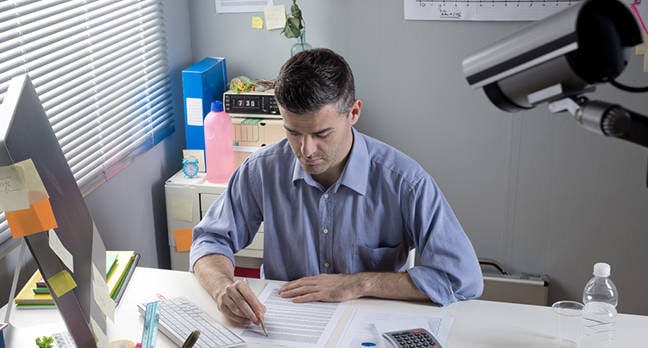

Workplace Surveillance Booming During Pandemic, Destroying Trust In Employers

An explosion in workplace monitoring during the pandemic – in part supported by common software tools from global vendors – threatens to erode trust in employers and employees' commitment to work, according to a European Commission research paper.

The study from the Joint Research Centre warns that excessive monitoring has negative psycho-social consequences including increased labour resistance, stress and turnover propensity, along with decreased job satisfaction and organisational commitment.

Meanwhile, a report published today from the UK's All-Party Parliamentary Group on the Future of Work said that "use of algorithmic surveillance, management and monitoring technologies that undertake new advisory functions, as well as traditional ones, has significantly increased during the pandemic."

The report calls on the government to "regulate for real accountability" of AI in the workplace, including monitoring technologies.

The Joint Research Centre report – Electronic Monitoring and Surveillance in the Workplace – said workplace monitoring, often supported by AI technologies, had expanded through both gig economy platforms such as Uber, Amazon's Mechanical Turk, and freelancing system Upwork, and common office-based systems such as Microsoft Teams, Google Workspace, Salesforce, and Slack.

Each trend presents different dangers and requires different responses, the report's author, St Andrews University School of Management professor Kirstie Ball, told The Register.

"With the pandemic, many people began working remotely and were distanced from colleagues, but started to feel monitoring was a lot more pervasive. If it does feel that way, then you get into problematic health and safety and psycho-social risks with workers. There is a decrease in job satisfaction, a decrease in maybe commitment to work, a decrease in trust in the workplace, increases in stress, and higher turnover potential."

The surveillance of employees working remotely during the pandemic has intensified, with the accelerated deployment of keystroke, webcam, desktop and email monitoring in mainland Europe, the UK, and the US, according to her report based on an analysis of 398 articles.

In December last year, Microsoft promised to wind back "productivity scores" for individual users following an outcry from privacy campaigners.

There was a danger that managers may not fully appreciate the biases and limitations of workplace-monitoring technology, Ball said. "And there is the fact that they might think that because it's AI, it's got to be right. In the past, employee surveillance debates were relegated to certain industries... but now it's affecting everyone's perspective, every type of work that's out there."

Meanwhile, platforms for gig work present a different set of problems, she said. While some use them for second jobs, or as a way to support other interests, many people rely on the platform as their main source of income. Monitoring is rife, and often the outcomes are inexplicable, Ball said.

"You have very little control over your work allocation on a platform. Because it's algorithmically governed, what you get paid is dependent on your customer ratings and past performance. There's no clear route through career development – although some platforms are looking at that. It's like a pay-per-job work experience which is very precarious, and people have absolutely no rights at all."

Workers on such platforms can be fired with little warning or explanation, based on the results of monitoring data and algorithmic decision making. Earlier this year, it emerged that Amazon drivers were being algorithmically fired by email for elements of work beyond their control.

The shocking testimonies provided an illustration of the problems of monitoring in platform work.

"Your work can be rejected without explanation, and you can also be fired – have your account suspended," Ball said. "There are certain things that you mustn't do on a platform. You can get suspended for speaking to customers too much, criticising the platform too much, raising too many problems... And there are lots of ways in which you can get suspended."

The UK's All-Party Parliamentary Group on the Future of Work – jointly chaired by Conservative MP David Davis, Labour MP Clive Lewis, and Labour Lord Jim Knight – said workplace monitoring was an example where the introduction of AI needs better regulation.

Use of algorithmic surveillance, management, and monitoring technologies that undertake new advisory functions, as well as traditional ones, had significantly increased during the pandemic, according to witnesses Abigail Gilbert of the Institute for the Future of Work, Trades Union Congress policy officer Mary Towers, and Oxford University law professor Jeremias Adams-Prassl.

"Pervasive monitoring and target setting technologies, in particular, are associated with pronounced negative impacts on mental and physical wellbeing as workers experience the extreme pressure of constant, real-time micro-management and automated assessment," the report said.

"AI is transforming work and working lives across the country in ways that have plainly outpaced, or avoid, the existing regimes for regulation. With increasing reliance on technology to drive economic recovery at home, and provide a leadership role abroad, it is clear that the government must bring forward robust proposals for AI regulation to meet these challenges."

A poll by union Prospect found nearly one in three workers are being monitored at work, up from a quarter in April. "New technology allows employers to have a constant window into their employees' homes, and the use of the technology is largely unregulated by government," said general secretary Mike Clancy.

Commenting on the Prospect-commissioned poll, released a week ago, Chi Onwurah, MP and Labour's Shadow Digital Minister, said:

"The bottom line is that workers should not be subject to digital surveillance without their informed consent, and there should be clear rules, rights and expectations for both businesses and workers."

The Information Commissioner's Office is updating its Employment Practices Code and, in August, put out a call for comments from businesses of all sizes, workers, trades unions, and professional and trade bodies. The consultation closed on 28 October.

The UK government published an AI Strategy in September, but it makes only vague commitments on regulation.

"We will consider what outcomes we want to achieve and how best to realise them, across existing regulators' remits and consider the role that standards, assurance, and international engagement plays," the strategy document says. ®
