Things Are Going To Get Weird As The Nanometer Era Draws To A Close

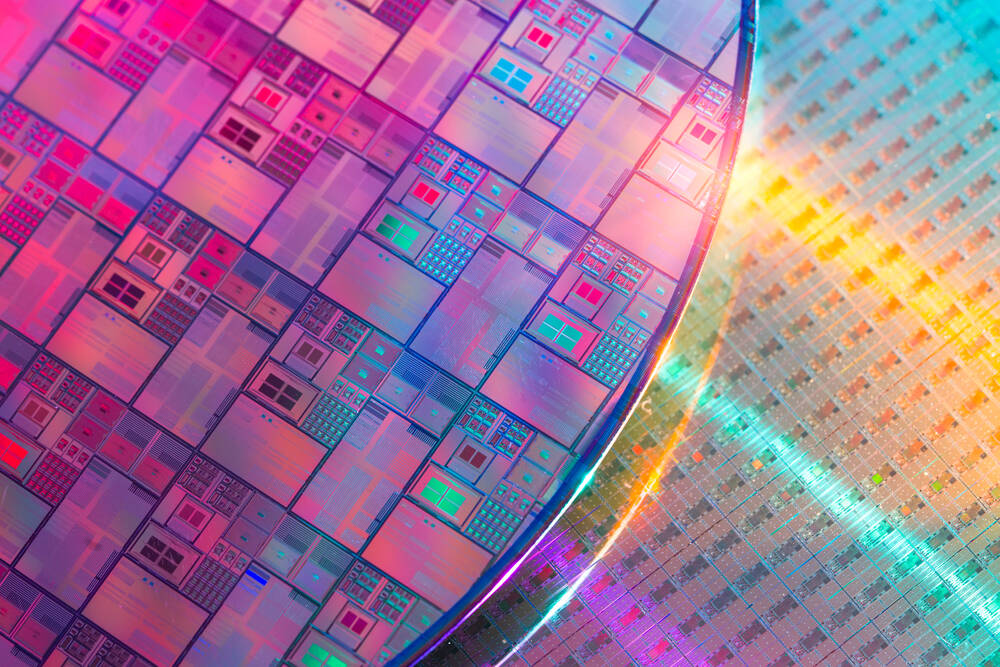

Comment: With 3nm production reaching maturity and 2nm on the way, TSMC is reportedly laying the groundwork for the next logical step, a 1nm fab.

According to Taiwanese media, TSMC has its sights set on building advanced chip kit in the island nation's Chiayi Science Park, with a plan to produce state-of-the-art chips based on a 1nm process. The news serves as a reminder that the nanometer era of semiconductor manufacturing is rapidly drawing to a close, at least at the higher end. We're confident bog-standard microcontrollers, power and analog electronics, low-complexity and legacy ICs, and other such fare will remain on more modest process nodes for some time.

Samsung is ramping 3nm production and plans to roll out its 2nm processes in 2025, while Intel's next-gen 20A process tech (the name stands for 20 angstroms, or about two nanometers) is slated to debut later this year, assuming there are no delays.

But while nanometers and angstroms get thrown around to describe improvements in process tech, the meaning has changed considerably over the past decade as transistor tech has matured. And as the wheels come off Moore's Law, and generational process improvements become less impactful, several emerging technologies to boost performance and density are taking precedence.

From measurement to marketing

Prior to 2011, most chips used planar transistors, and the nanometer figure described the physical gate length, making it representative of transistor size. The move to FinFET transistors around this time rendered nanometers inappropriate as a descriptor, yet the practice continued, with the figure becoming largely symbolic of equivalent density.

Intel CEO Pat Gelsinger highlighted this point as justification for rebranding its own process tech to better align with TSMC and Samsung's naming conventions shortly after his return to the x86 titan in 2021.

Suddenly, Intel's 10nm process became Intel 7 and its 7nm process became Intel 4 and Intel 3. While clearly a marketing stunt designed to distract from the fact Intel had fallen behind on process tech, Gelsinger also wasn't wrong. The nanometer as a metric for process tech is a marketing tool to describe improvements in transistor density, and given that fact, rebranding was a smart move.

But, since nanometers only loosely describe relative density and there's no standardization, comparing one foundry operator's process tech to another becomes a lot more complicated.
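To see why the labels cannot be read as literal measurements, it helps to work out what they would imply if they were. The sketch below is purely illustrative arithmetic: it assumes a node name is a true linear dimension, so that density scales with the inverse square of the label. The function names are hypothetical, and nothing here describes what any foundry actually delivers.

```python
ANGSTROMS_PER_NM = 10  # 1 nanometer = 10 angstroms


def angstrom_label_to_nm(label_angstroms: float) -> float:
    """Convert an angstrom-style node label (e.g. 20 for '20A') to nanometers."""
    return label_angstroms / ANGSTROMS_PER_NM


def implied_density_gain(old_label_nm: float, new_label_nm: float) -> float:
    """Density gain IF the labels were real linear dimensions.

    Area scales with length squared, so halving a literal feature size
    would quadruple the number of features per unit area.
    """
    return (old_label_nm / new_label_nm) ** 2


print(angstrom_label_to_nm(20))    # 2.0
print(implied_density_gain(3, 2))  # 2.25
print(implied_density_gain(2, 1))  # 4.0
```

Because the names are marketing labels rather than measurements, there is no guarantee that a real 3nm-to-2nm transition delivers anything like the 2.25x this naive reading suggests, which is exactly why cross-foundry comparisons by name alone are unreliable.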

A key factor is that as we try to shrink FinFETs to pack more transistors onto silicon dies, or the same number onto smaller dies, to give users better components overall, we've reached the point of diminishing returns. Due to the physics involved, it is increasingly difficult to achieve good performance with reasonable power consumption.

Next-gen process nodes will use gate-all-around (GAA) transistors, or what Intel has taken to calling RibbonFETs.

You can think of a RibbonFET or GAA transistor as a normal FinFET with not one source-drain channel running through a fin-like gate but a stack of separate ribbons of source-drain channels all running through the same gate fin. This increases the surface area of where the channel meets the gate, reducing current leakage, and allows engineers to further shrink their transistors in a viable fashion. This boosts transistor density, which for users typically means better performance without disastrous power efficiency.

Samsung's 3nm process already uses this tech, while Intel 20A and TSMC's upcoming 2nm process nodes will implement the technique.

The low-hanging fruit is gone

Improvements to the way chips are packaged and to the delivery of power to their circuitry have helped offset the FinFET shrink problem, among other benefits. But still, we're currently left with large power-hungry dies at the high end.

Nvidia's H100 GPU, for example, is close to the reticle limit at 814mm². These large-area chips likely have glum yields since you can only pack so many onto a wafer and some will be defective one way or another.
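A back-of-the-envelope calculation shows why big dies hurt. The sketch below uses a common first-order dies-per-wafer approximation and a simple Poisson yield model. The 300mm wafer size and the defect density of 0.1 defects per cm² are assumptions chosen for illustration, not figures reported for any real process, and the function names are hypothetical.

```python
import math


def gross_dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """First-order estimate: wafer area / die area, minus an edge-loss term."""
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(wafer_area / die_area_mm2 - edge_loss)


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Probability that a die has zero defects under a Poisson defect model."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-defects_per_cm2 * area_cm2)


DIE_AREA_MM2 = 814.0         # H100-sized die, from the text
ASSUMED_DEFECT_DENSITY = 0.1  # defects per cm^2, hypothetical

candidates = gross_dies_per_wafer(DIE_AREA_MM2)
fraction_good = poisson_yield(DIE_AREA_MM2, ASSUMED_DEFECT_DENSITY)
print(f"candidate dies per wafer: {candidates}")
print(f"estimated defect-free fraction: {fraction_good:.0%}")
print(f"estimated good dies per wafer: {candidates * fraction_good:.0f}")
```

Under these assumptions, a wafer holds only a few dozen candidate dies and fewer than half come out defect-free. Real products soften the blow by disabling faulty units and selling partially enabled parts, which this simple model ignores.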

This is why chip designers are increasingly relying on advanced packaging to combine multiple smaller chiplets into one big CPU or GPU within a processor package. AMD's Zen family and Intel's GPU Max cards are prime examples of what's possible using advanced packaging.
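The yield argument for chiplets can be sketched with the same kind of simple model. The example below assumes a Poisson defect model, a hypothetical defect density of 0.1 defects per cm², and a round 800mm² design split into four equal chiplets that are each tested before assembly. It ignores the extra area needed for die-to-die interfaces and any losses during packaging, so treat it as an illustration rather than a cost model.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Probability that a die of the given area has zero defects."""
    return math.exp(-defects_per_cm2 * area_mm2 / 100.0)


def usable_silicon_fraction(total_area_mm2: float, n_dies: int, defects_per_cm2: float) -> float:
    """Fraction of fabricated dies that are defect-free when a design of
    total_area_mm2 is split into n_dies equal pieces, each tested on its own."""
    return poisson_yield(total_area_mm2 / n_dies, defects_per_cm2)


TOTAL_AREA_MM2 = 800.0        # hypothetical design size
ASSUMED_DEFECT_DENSITY = 0.1  # defects per cm^2, hypothetical

monolithic = usable_silicon_fraction(TOTAL_AREA_MM2, 1, ASSUMED_DEFECT_DENSITY)
chiplets = usable_silicon_fraction(TOTAL_AREA_MM2, 4, ASSUMED_DEFECT_DENSITY)
print(f"monolithic die, defect-free fraction: {monolithic:.0%}")
print(f"200mm^2 chiplet, defect-free fraction: {chiplets:.0%}")
```

A single defect scraps a whole monolithic die but only one small chiplet, so far more of the wafer ends up in sellable parts. The gap widens as the defect density or the total die area grows.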

Arguably the more interesting development, however, is happening around power delivery, where Intel has been particularly outspoken about its progress. In a nutshell, modern processors are a rat's nest of nanoscale data and power wires that are laid down layer by layer during the manufacturing process. By moving to backside power delivery, foundry operators hope to unlock efficiency gains by simplifying this routing.

In a sense, all the low-hanging fruit in process design has been gathered and now chip builders are having to reach higher and work harder to push the envelope. From here things start to get weird.

Up to this point, advanced packaging has largely been homogeneous in the sense that one vendor designs and implements all the chips. This will start to change as technologies like Universal Chiplet Interconnect Express (UCIe) open the door to heterogeneous packages. Imagine AMD GPU and Intel CPU dies sharing a common socket. Will this specific example ever happen? Maybe not (technically, Intel and AMD have already tried this and it didn't work out), but UCIe opens the door to these kinds of chiplet architectures, even if it creates some new headaches along the way.

Even the substrates on which those chiplets are packaged are being reexamined. Last year, Intel revealed it was working on glass substrates, which can support denser, hotter arrays of chiplets without warping. As strange as it sounds, Intel says the tech is only a few short years away.

Others, meanwhile, are exploring the use of silicon photonics as a means to shuttle data between those chiplets. You see, the use of multiple dies lets us build bigger, more complex accelerators, but also introduces challenges with respect to data movement. Lightmatter's Passage, Celestial AI's Photonic Fabric, and Ayar Labs' TeraPHY are a few examples of how folks are trying to alleviate these challenges by bringing optical data links directly to the silicon.

While advances in process tech remain important, factors like packaging, power delivery, and signaling are arguably just as important, and will likely become more so as time goes on. So, unless someone stumbles upon some miracle solution for continuing Moore's Law, chip manufacturing is going to be awfully or perhaps delightfully weird over the next decade or so. ®
