Tachyum Says Someone Will Build 50 ExaFLOPS Super With Its As-yet Unfinished Chips

Interview Tachyum's first chip, Prodigy, hasn't even taped out, let alone gone into mass production, but one customer has, we're told, committed to buying hundreds of thousands of the processors to power a massive 50 exaFLOPS supercomputer.

Usually when we see numbers like that, the obvious assumption is they're talking about AI FLOPS using 8- or 16-bit floating-point math precision, not the 64-bit double-precision calculations typically used in high-performance computing. But Tachyum claimed the system will be capable of 25x the performance of "the world's fastest conventional supercomputer built just this year."

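This appears to be a reference to the newly inaugurated Aurora supercomputer at Argonne National Laboratory, which boasts more than two exaFLOPS of peak FP64 performance. Twenty-five times that figure works out to roughly 50 exaFLOPS, which suggests Tachyum really is talking about double precision.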

If Tachyum's claim weren't already wild enough, the processor designer says the forthcoming system will be capable of eight zettaFLOPS of AI performance for large language models and will boast hundreds of petabytes of DDR5 memory when it's completed in 2025.

A paper Prodigy

To fully understand the scope of what Tachyum is planning we need to take a closer look at the chip the company has spent the past few years developing and redeveloping.

Tachyum describes Prodigy as a universal processor. As the name suggests, this isn't some specialized chip designed solely to accelerate AI or HPC workloads. It's intended as a general-purpose component that can run any workload you might throw at it. The emulator QEMU has been ported to Prodigy's architecture to run today's x86, Arm, and RISC-V code.

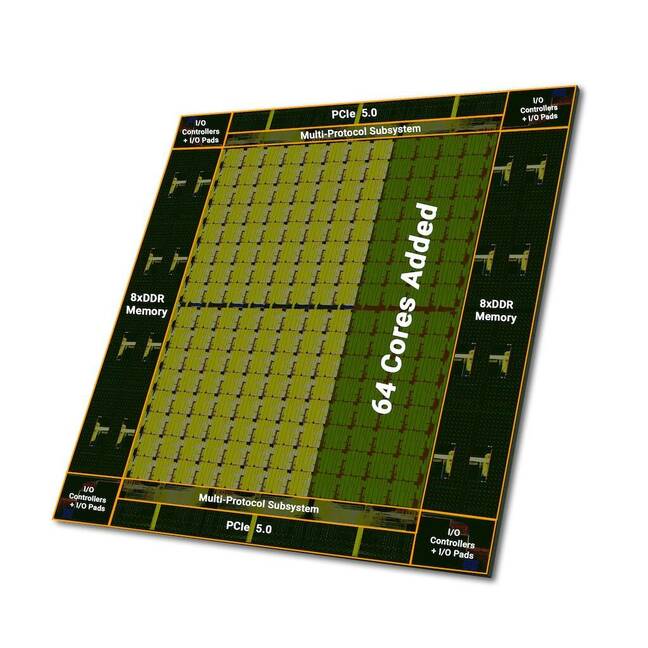

After Tachyum ditched IP supplier Cadence amid a lawsuit last year, Prodigy was redesigned again, now with 192 cores.

It's "a CPU which integrates AI and HPC for free. That's our story," CEO Radoslav Danilak told The Register.

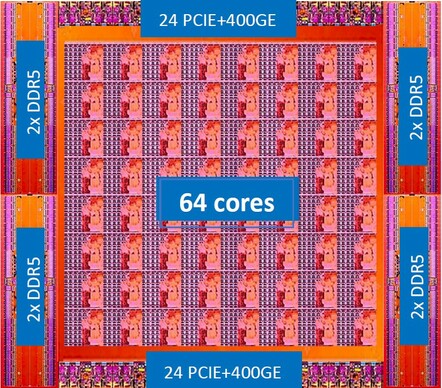

Taking a look at Tachyum's latest renders, we can see most of Prodigy's 600 mm² die is dedicated to its 192 64-bit processor cores. That makes it a decently large chip, though not as big as Nvidia's GH100 at 814 mm². The cores feature a custom instruction set architecture and will execute four out-of-order instructions per clock cycle at frequencies in excess of 5 GHz, according to the data sheet at least.

To appeal to the Big Iron markets, the cores will feature double 1,024-bit vector processing capabilities with native support for matrix math. This, Danilak said, increases the "amortization of the CPU overhead fetch, decode, scheduling, and so on compared to data path by order of magnitude."

According to Tachyum, these cores are also fast, with the top-specced part supposedly capable of 90 teraFLOPS of FP64 performance and 12 petaFLOPS of FP8 with sparsity. By employing greater degrees of sparsity, the upstart biz boasts, the chip will be capable of 48 petaFLOPS.

"We will be showing, in the next 30 days — publishing a paper — these measurements from these industry standard benchmarks, where we basically achieve, including training, two bits per weight," Danilak said.

To put those numbers in perspective, Tachyum is essentially saying its chip is going to deliver 3x the performance of Nvidia's H100 SXM modules in both HPC and AI workloads. We'll note, though, that given the timeline, the H100 more than likely won't be the chip Prodigy has to contend with; we expect Nvidia to announce its next-gen accelerator next spring.

However, one area where Prodigy falls behind Nvidia is memory bandwidth. With 16 channels of DDR5 and support for 7,200 MT/s memory when it becomes available, those cores will be fed by 921 GB/s of bandwidth.

That 921 GB/s, Danilak admits, "is not enough," but he notes his engineers have developed "bandwidth amplification technology, which is a cute word, but basically we have bandwidth compression for double precision, single precision, and so on ... So we have kind-of two terabytes per second."
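The raw bandwidth figure can be reproduced from the channel count and transfer rate. The sketch below is our own back-of-the-envelope arithmetic, not anything published by Tachyum. It assumes a standard 64-bit (8-byte) data path per DDR5 channel, and the variable and function names are ours.

```python
# Back-of-the-envelope DDR5 bandwidth for one Prodigy socket.
CHANNELS = 16                    # DDR5 channels per socket (from the article)
TRANSFERS_PER_SEC = 7_200e6      # DDR5-7200: 7,200 megatransfers per second
BYTES_PER_TRANSFER = 8           # assumption: 64-bit data path per channel

def peak_bandwidth_gbs(channels, transfers_per_sec, bytes_per_transfer):
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1e9 bytes)."""
    return channels * transfers_per_sec * bytes_per_transfer / 1e9

raw_gbs = peak_bandwidth_gbs(CHANNELS, TRANSFERS_PER_SEC, BYTES_PER_TRANSFER)

# Danilak's "kind-of two terabytes per second" implies this effective gain
# from compression over the raw figure.
CLAIMED_EFFECTIVE_GBS = 2_000
implied_gain = CLAIMED_EFFECTIVE_GBS / raw_gbs

print(f"raw: {raw_gbs:.1f} GB/s, implied compression gain: {implied_gain:.2f}x")
```

On these assumptions the raw figure comes to about 921.6 GB/s, and the claimed two terabytes per second would need a little over 2x effective compression.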

By comparison, most other chips built with AI in mind rely on cramming as much high-bandwidth memory (HBM) around the compute die as possible. The advantage of HBM is bandwidth. The HBM3e memory used in the latest iteration of Nvidia's GH200s — the name for its Grace Hopper CPU-GPU chips — boosts bandwidth to 5 TB/s.

The downside to HBM is capacity. You can only pack so many modules around a die before you run out of space. But, with 16 memory channels each supporting two DIMMs, Tachyum says the chip can support up to 2 TB per socket.
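For a sense of what that capacity implies per module, here is a rough sketch. It assumes every slot is populated with identical DIMMs and that 2 TB means 2,048 GB; neither detail comes from Tachyum.

```python
# Per-DIMM capacity implied by a fully populated 2 TB socket.
CHANNELS = 16                 # memory channels per socket (from the article)
DIMMS_PER_CHANNEL = 2         # DIMMs per channel (from the article)
MAX_CAPACITY_GB = 2 * 1024    # assumption: 2 TB counted as 2,048 GB

dimm_slots = CHANNELS * DIMMS_PER_CHANNEL
gb_per_dimm = MAX_CAPACITY_GB / dimm_slots   # assumes identical DIMMs in every slot

print(f"{dimm_slots} slots at {gb_per_dimm:.0f} GB each")
```

That works out to 32 slots of 64 GB apiece.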

An 'effing big machine'

Even if Prodigy turns out to be as powerful as Tachyum hopes, a 50 exaFLOPS supercomputer is going to be absolutely massive. "It's a huge, big, effing big machine," Danilak exclaimed.

Taking Tachyum at its word and assuming Prodigy will actually manage 90 teraFLOPS of FP64 performance per socket, we estimate such a machine would require about half a million chips. Based on a whitepaper detailing a smaller system released earlier this year, that big beast will work out to just under 1,600 88U cabinets.
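The socket estimate is simple division, sketched here for anyone who wants to check it. It assumes every socket sustains the full 90 teraFLOPS peak and ignores any spare or service nodes; the names are ours.

```python
# How many sockets does 50 exaFLOPS of FP64 take at 90 teraFLOPS apiece?
TARGET_FP64_FLOPS = 50 * 10**18       # 50 exaFLOPS (from the article)
PER_SOCKET_FP64_FLOPS = 90 * 10**12   # 90 teraFLOPS per socket (Tachyum's claim)

# Integer ceiling division: a fraction of a socket still means a whole chip.
sockets_needed = -(-TARGET_FP64_FLOPS // PER_SOCKET_FP64_FLOPS)

print(f"{sockets_needed:,} sockets")
```

The result is 555,556 sockets, a little over half a million.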

Danilak tells us our "math is not very far off," and that the machine – ordered by a US customer – will require "a few hundreds of thousands of sockets. We are not disclosing the exact number."

What we do know is the servers are to be networked using 400 Gb/s RDMA over Converged Ethernet (RoCE) using tech supplied by a third-party vendor, though like many facets of the system, Tachyum isn't saying which vendor just yet.

The machine detailed by Tachyum is much, much larger than the largest supercomputers in the US. Argonne National Laboratory's Aurora system, completed in June, packs 10,624 compute blades containing 63,744 Intel Ponte Vecchio GPUs and 21,248 Intel Xeon Max Series processors into 166 racks, and is expected to consume on the order of 60MW.

It's not clear how much power a 50-exaFLOPS system running on Prodigy will consume, though the whitepaper offers some clues. In that example, the company estimated it could squeeze 3.3 exaFLOPS of FP64 performance into 6,000 square feet of floor space on a 45.2MW power budget. Extrapolating that out, a 50-EF machine would need on the order of 685MW of power.
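The extrapolation is a straight linear scale-up of the whitepaper's figures, sketched below. Linear scaling is our simplifying assumption, not Tachyum's projection, and the names are ours.

```python
# Linear power extrapolation from the whitepaper's smaller system.
WHITEPAPER_EXAFLOPS = 3.3     # FP64 exaFLOPS in the whitepaper example
WHITEPAPER_POWER_MW = 45.2    # power budget for that example, in MW
TARGET_EXAFLOPS = 50          # size of the announced machine

mw_per_exaflop = WHITEPAPER_POWER_MW / WHITEPAPER_EXAFLOPS
# Assumption: power grows in direct proportion to FP64 performance.
projected_power_mw = mw_per_exaflop * TARGET_EXAFLOPS

print(f"{mw_per_exaflop:.1f} MW per exaFLOPS, {projected_power_mw:.0f} MW in total")
```

That is roughly 13.7 MW per exaFLOPS, or about 685 MW for the full machine.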

Danilak assures us the real figure won't be that high, as power consumption won't scale linearly from the whitepaper example. "Power is the most difficult problem on the project," he added. "You cannot get 200MW, but 700MW is not doable either."

While he didn't disclose where in the United States the facility would be built, he did say power was being taken into consideration. "To make it clear, we are not saying we will do the system level installation. We don't have resources for that," Danilak emphasized. "The contract is focusing on delivery of the chips."

In other words, whether or how Tachyum's mystery customer intends to power such a system isn't really the chip house's problem; it just needs to provide the silicon.

A long and bumpy road

If you've noticed we keep saying "will" and not "does" when talking about Prodigy, that's because, despite insisting work on the massive supercomputer will begin next year, Tachyum has yet to tape out the chip for production. So for the moment, Prodigy only really exists on paper, in simulations, or in hardware emulation running on a bank of FPGAs.

"We are almost there; we are not there yet," Danilak said.

In the five years since Tachyum first announced development of Prodigy, the company has repeatedly announced plans to tape out, only for roadblocks to stall the project. And with each delay, the chip has grown more ambitious.

When our sibling site The Next Platform first looked at Prodigy, the top-end part was slated to have 64 cores and tape out by the end of 2020. The expected tape-out date then slipped by two years, while the core count doubled to 128. Before that could happen, the biz changed electronic design automation (EDA) vendors and bumped the core count to 192, again losing time in the process.

"The tools behave differently. The turn of knobs and the settings — what we took like 18 months to 24 months to find optimal settings for on one tool. When you switch the tool, it's completely haywire," Danilak explained. "So, it took more than a year — 15 months — to basically get where we have been on the physical side. Simulation, we switched in three months."

As we reported at the time, the change of tools was the result of a lawsuit between Tachyum and semiconductor IP supplier Cadence amid allegations of shenanigans.

"The journey is quite a bit longer than typical," Danilak admitted, explaining that the Silicon Valley-based firm has faced a number of challenges.

"At the beginning, we lost more than a quarter, almost two quarters, because of OFAC," Danilak said, referring to issues getting series-A funding into America connected to the US Office of Foreign Assets Control. "The second thing: COVID-19 hit series-B [with] very unhappy timing. And third of all, we have to replace the tools now."

The latest hurdle, according to Danilak, came as a result of an exclusive feature request from a large customer. What those features are, he didn't say, but we're told it did require additional design and planning time.

However, instead of making two chips, Danilak said "there will be Prodigy C — that's for the customer — and there is general Prodigy market. And it's the same die, but during manufacturing, we will blow the fuse, which will disable functionality for that special customer for everybody else."

The clock is ticking

The clock is now ticking on Prodigy's release. Tachyum will have to tape out soon if it's going to make the initial delivery of chips on time.

To speed up the process, the company will employ a "split tape out." This, Danilak explained, involves having the fab start work on the bottom layers of the wafer and complete the rest later. This, he claims, will allow the chip house to make changes more quickly as issues in manufacturing and design are discovered.

"We expect that we might need one metal spin on the chip," he explained. "Today, rarely the first chip goes to production." By that he means two revisions of the processor are expected to be made: the first a pilot run, and then another after fixing up any problems. This is standard stuff.

According to the release, the first phase of the supercomputer's deployment is scheduled to happen in 2024 with the bulk of the deployment slated for 2025.

"First phase, we [will] deliver a certain number of the chips so they [the customer] can basically install it and they can start debugging, and management software, and so on," Danilak said. "After metal spin, we will open the socket and replace our chip… and we will eat the cost."

In other words, Tachyum could well be squashing bugs after the first chips are deployed for testing and evaluation. As we said, this isn't unusual. Chipmakers like Intel usually sample test chips to large customers for evaluation purposes. It also wouldn't be unprecedented for a design defect to stall the deployment of a major system. Intel's long-delayed Sapphire Rapids Xeons, for instance, were responsible for holding up Aurora's deployment.

Whether Prodigy's tape out will get pushed back again remains to be seen. However, Danilak is cautiously optimistic.

"After we went through the COVID industry downturn, and so on, God knows what will happen basically next year, but barring some crazy surprises, they should be there," he said.

With that said, he does see several risk factors on the horizon. "What happens if there's war in Taiwan or a blockade," he said, adding the company has contingency plans in place to mitigate this risk "at the cost of time." ®
