Pick A Core, Any Core, Says Intel – We'll Magically Put The Right Workload Onto One In A Hybrid SoC Or Accelerator

Intel has revealed some details about its upcoming chip designs, and claims they are the biggest and most significant change to its products for years.

This includes new CPUs and the Alder Lake family that places different types of core alongside each other in system-on-chip packages.

The chip giant is kinda taking a leaf out of the Arm world's book, here.

Arm-compatible smartphones, tablets, and PCs typically have a mix of CPU core types: some lightweight ones that are battery friendly and focused on running things like background tasks and less-demanding apps, and some that draw more power and are used only when applications need a surge in processing performance. The operating system should assign apps to the appropriate cores, ensuring software gets the right level of performance without draining the battery too much.

Intel is trying to go the same sort of route: one of its new CPU core types is called the Efficient Core – aka Gracemont – and Intel says that when compared to its 2015-era Skylake architecture, it "achieves 40 percent more performance at the same power or delivers the same performance while consuming less than 40 percent of the power."
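To put those two quoted figures on a common footing, here is a small back-of-the-envelope sketch that restates them as performance-per-watt ratios. It assumes Skylake is normalized to 1.0 for both performance and power, and it treats "less than 40 percent of the power" as exactly 0.4, so the second number is a lower bound. The function and variable names are ours, not Intel's.

```python
# Restating Intel's two quoted E core claims as performance-per-watt ratios.
# Assumption: the Skylake baseline is normalized to performance 1.0, power 1.0.

def perf_per_watt(performance: float, power: float) -> float:
    """Return performance divided by power, both relative to the baseline."""
    return performance / power

baseline = perf_per_watt(1.0, 1.0)

# Claim 1: 40 percent more performance at the same power.
iso_power = perf_per_watt(1.4, 1.0)

# Claim 2: same performance at less than 40 percent of the power.
# Using exactly 0.4 gives the minimum improvement the claim implies.
iso_perf_floor = perf_per_watt(1.0, 0.4)

print(f"baseline:         {baseline:.2f}x")
print(f"same power:       {iso_power:.2f}x")
print(f"same performance: at least {iso_perf_floor:.2f}x")
```

Read this way, the two halves of the claim describe different points on the power curve: roughly 1.4 times the efficiency when run flat out, and at least 2.5 times when throttled back to Skylake-level performance.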

As the name implies, Intel intends this E core to be used primarily in devices like thin and light laptops.

Some notable features: the E core has a 64KB L1 instruction cache, 32KB of L1 data cache, and up to 4MB of L2 cache shared by four cores. Spectre-be-damned, Intel is leaning hard into speculative execution to speed up software. This means improvements, we're told, to the branch prediction and prefetchers, and dual three-wide x86 instruction decoders – so up to six instructions per cycle queued up. The pipeline has a 256-entry out-of-order window, and 17 execution ports into the integer ALUs, numerous floating-point and vector math units, and memory access units.

The E core supports AVX vector math with extensions to accelerate integer-based machine-learning calculations.

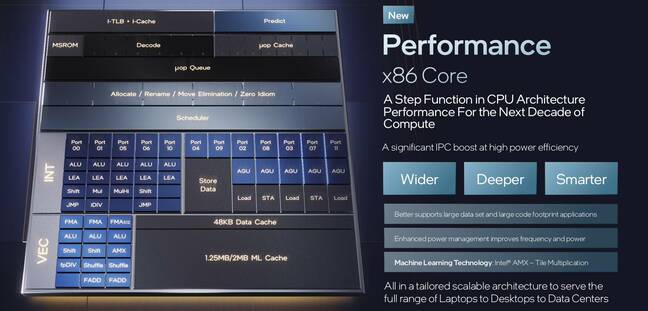

Complementing the E core is the Intel Performance Core, previously discussed under the name Golden Cove. The P core includes Advanced Matrix Extensions, an acceleration engine designed to speed AI workloads. This core is also supposed to scale from laptops and desktop PCs to servers.

Like with the E, Intel says the P core uses all manner of new techniques and speculative execution to anticipate processing requirements and get stuff done efficiently. The P core has, among other things, six instruction decoders, a bigger and wider micro-op cache, 12 execution ports, bigger register files, improved branch prediction and prefetching, a 512-entry reorder buffer, faster math operations, 32KB of L1 instruction cache with a larger instruction TLB, 48KB of L1 data cache, and up to 2MB of L2 cache. More instructions are executed at the rename and allocation stage of the pipeline, too, interestingly.

All of which is lovely, we guess – but Intel's next-gen architectures typically improve this sort of thing. If you want the full details, Intel's presentations, slides, marketing, and so forth on its new architectures are here.

Crucially, this time around, Intel will put E cores and P cores in the same system-on-a-chip, and operating systems can decide which core and which type of core to use. This will be possible in forthcoming 10nm Alder Lake systems-on-a-chip that incorporate a mix of E and P cores, along with new Intel tech called Thread Director.

Intel explained that Thread Director can detect a demanding workload like a game starting up and give it some P core time. If email is syncing in the background, it gets an E core. Thread Director can schedule to both types of cores, and can detect a workload idling on a P core and shunt it off to an E core until it can justify use of the higher-performance part of the chip.
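The placement behavior Intel describes can be pictured with a toy model. The sketch below is purely illustrative and is not Intel's algorithm or any operating system's scheduler: the `Task` class, the `demand` score, the `threshold` value, and the `assign_cores` function are all hypothetical names invented for this example.

```python
from dataclasses import dataclass

@dataclass
class Task:
    name: str
    demand: float  # hypothetical 0.0-1.0 estimate of how much performance the task wants

def assign_cores(tasks, p_cores, e_cores, threshold=0.5):
    """Greedy toy placement: demanding tasks take P cores, the rest take E cores."""
    placement = {}
    free_p, free_e = p_cores, e_cores
    # Consider the most demanding tasks first so they get first pick of P cores.
    for task in sorted(tasks, key=lambda t: t.demand, reverse=True):
        if task.demand >= threshold and free_p > 0:
            placement[task.name] = "P"
            free_p -= 1
        elif free_e > 0:
            placement[task.name] = "E"
            free_e -= 1
        elif free_p > 0:
            # No E core left: fall back to a spare P core rather than wait.
            placement[task.name] = "P"
            free_p -= 1
        else:
            placement[task.name] = "waiting"
    return placement

tasks = [Task("game", 0.9), Task("email-sync", 0.1), Task("idle-editor", 0.05)]
placement = assign_cores(tasks, p_cores=1, e_cores=4)
print(placement)
```

In this model, the game lands on the P core and the background jobs land on E cores. Lowering the game's `demand` below the threshold and re-running the function would move it to an E core, which mirrors the "shunt it off until it can justify the P core" behavior described above.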

While talking this up as a new dawn of hybrid chips, Intel acknowledged that other chip designers have been here before, as we mentioned. Chipzilla's point of difference is a belief that its hybrids are all about performance, while rivals mix and match cores to control power consumption.

Windows 11 will be ready for Thread Director out of the box. Linux OS developers are aware it's coming. Whether Thread Director in Alder Lake matters to Linux and other OSes aimed at servers is moot: while Intel advances the P core as just the thing for "large code footprint applications," Alder Lake is a client-level architecture, meaning it's for PCs and laptops.

It's worth pointing out that Intel just canned Lakefield, an earlier attempt to place a mix of CPU cores in a system-on-chip for personal devices. That chip family, which used a Sunny Cove high-performance core alongside four Tremont Atom-based energy-efficient cores, didn't work out, and Intel is pressing ahead with Alder Lake and its E and P cores.

Servers take a P, too

Happily, Intel is also using P cores in its new server silicon – code-named Sapphire Rapids – which will be marketed under the Xeon Scalable Processor name.

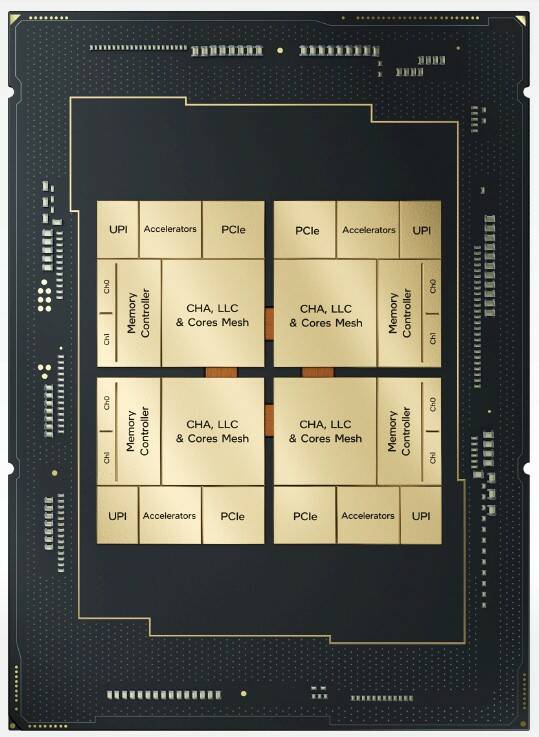

Sapphire Rapids puts Intel's "tiles" tech to work. Tiles are essentially individual processors, and Intel has figured out how to place multiple tiles in a single package that uses "embedded multi-die interconnect bridge" packaging to present all the tiles as a single, logical processor.

This rather reminds us of AMD's chiplets, in which multiple physical processor dies are included in one logical package. Our sister site The Next Platform has more on Intel's tile approach, here.

Tiles are how Intel plans to serve clouds and workloads like AI or microservices that require scale. Sapphire Rapids therefore represents a riposte to the many-core Arm-powered processors that the likes of Oracle and AWS are currently advancing as just the ticket for microservices in a one-core-one-container world.

The upcoming Sapphire Rapids Xeons are set to include the following features, all designed to speed servers.

The Accelerator Interfacing Architecture (AIA) might be the most significant because it's how Intel will offload housekeeping workloads, such as handling storage I/O or running virtual switches, into what it now prefers to call Infrastructure Processing Units (IPUs, aka DPUs).

Intel execs at Architecture Day, the 2.5-hour media briefing The Register attended earlier this week, were cagey about exactly how AIA will put IPUs to work, mentioning collaboration with Microsoft and VMware. They were bullish at the prospect of reclaiming instruction cycles, pointing out that some microservices at Facebook spend between 31 and 83 percent of server power on overheads. If that overhead work can be shifted to IPUs, the CPU cores will be free to do other things, we're told.
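To see why those overhead figures excite Intel, consider a best-case calculation. The sketch below assumes, optimistically, that every bit of overhead can move to an IPU and that the quoted percentages translate directly into CPU capacity; neither assumption comes from Intel, and the function name is ours.

```python
def freed_capacity_multiplier(overhead_fraction: float) -> float:
    """Best-case growth in application work if all overhead leaves the CPU.

    If a fraction f of the CPU goes to overhead, applications get 1 - f.
    Offloading all of it raises the application share to 1, a gain of 1 / (1 - f).
    """
    if not 0.0 <= overhead_fraction < 1.0:
        raise ValueError("overhead_fraction must be in [0, 1)")
    return 1.0 / (1.0 - overhead_fraction)

# The two ends of the range quoted for Facebook microservices.
for overhead in (0.31, 0.83):
    gain = freed_capacity_multiplier(overhead)
    print(f"{overhead:.0%} overhead -> up to {gain:.2f}x application capacity")
```

Under those assumptions the upside ranges from about 1.45 times to about 5.88 times the application capacity per server. Real-world gains would be smaller, since not all overhead can be offloaded.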

One possibility afforded by AIA was diskless servers: apparently an IPU, using the accelerator architecture, can provide a virtual NVMe device that uses external storage – even as a boot drive.

Intel suggested that IPUs and AIA will be big in clouds and among communications service providers, and that as-yet-unrevealed alliances will make IPUs relevant to even mainstream data center users.

And in a related non-surprise, Intel is now selling IPUs. The semiconductor giant revealed Mount Evans, its first dedicated IPU ASIC, and an FPGA-based IPU reference platform called Oak Springs Canyon.

The mere fact that Intel has prepared server processors ready for IPUs matters because hype about the devices and a new data center architecture they can enable has built for years, with little sign of how it will be realized. AIA shows IPUs/SmartNICs/DPUs are on their way to real use.

Faster, chippycat! Drill, drill!

Another important accelerator in Sapphire Rapids is Advanced Matrix Extensions (AMX), silicon dedicated to tensor processing and therefore to deep-learning algorithms. AMX can work even as Sapphire Rapids P cores go about other business. AMX thus has its own instruction set.
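For readers wondering what "tile" or "matrix" hardware buys you, the general idea is to multiply matrices one small block at a time so each block can be held close to the arithmetic units. The pure-Python sketch below illustrates blocked matrix multiplication in general; it is not AMX code, it uses none of AMX's instructions, and the `tile` size of 2 is an arbitrary illustrative value rather than the hardware's real tile dimensions.

```python
def matmul_tiled(a, b, tile=2):
    """Multiply matrices a (n x k) and b (k x m) one tile-sized block at a time."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0] * m for _ in range(n)]
    # Walk the output and the shared dimension in tile-sized steps.
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                # Multiply one block of a by one block of b and accumulate into c.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = 0
                        for kk in range(k0, min(k0 + tile, k)):
                            acc += a[i][kk] * b[kk][j]
                        c[i][j] += acc
    return c

a = [[1, 2, 3], [4, 5, 6]]
b = [[7, 8], [9, 10], [11, 12]]
result = matmul_tiled(a, b)
print(result)
```

A dedicated matrix engine would do the innermost block multiply-accumulate in hardware rather than in nested loops, which is where the speedup for deep-learning workloads is meant to come from.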

The Next Platform has more analysis of AMX, here.

The other accelerator in Sapphire Rapids is the Data Streaming Accelerator (DSA) that takes care of moving data. This is another bottleneck-clearer aimed at ensuring data flows among the CPU cores, memory, caches, attached storage, and networked storage devices without leaving CPUs waiting for something to do.

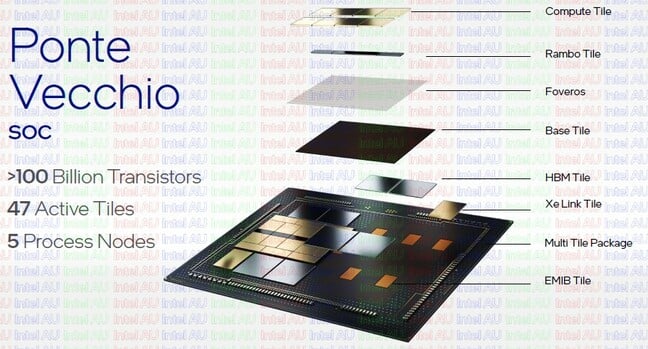

If you need even more grunt, Intel has a bridge to sell you – the Ponte Vecchio GPU.

Ponte Vecchio is dripping with customizations for high-performance computing – whenever it arrives, it's supposed to be going into a top US supercomputer – but is as notable for its implementation of tiles and collaboration with TSMC.

The GPU features five tiles, each specializing in different chores and each made using different fabrication processes. The compute tile is built by Intel's Taiwanese rival TSMC, and Chipzilla is therefore keen to claim that Ponte Vecchio demonstrates its new IDM 2.0 strategy under which it just gets stuff done by working with whatever foundry is best-suited to the job, rather than only using stuff it built all by itself.

Intel also has new GPUs and a new brand for them – Arc – mostly aimed at gamers and content creators.

Don't whip out the checkbook just yet

All of the above sounds like it'll be fun to play with.

Curb your enthusiasm, readers, because Intel can't quite say when much of it will land.

Ponte Vecchio is a sometime-in-2022 affair. Alder Lake will arrive "later this year," and it is unclear whether PCs featuring it will arrive in time to let Windows 11 take advantage of Thread Director when the OS launches in "late 2021."

Intel CEO Pat Gelsinger appeared only at the end of the Architecture Day presentation and had little to say other than that the new stuff represents a change in the way we need to think about chips. In the past, he said, new processes defined important advances in silicon technology. Packaging silicon into hybrid machines is where the action is today, he opined.

It didn't seem at all odd for Gelsinger to just put the cherry on top of all the technology announcements mentioned above, because he wasn't at the company when they were decided and developed.

Gelsinger is, however, in the big chair now that Intel must sell what it believes are market-making innovations, even as Arm presses deeper into Intel territory, Qualcomm advances its ambitions to run everywhere, AWS pushes its own silicon ahead of Xeons, AMD finds novel ways to attack, and Nvidia tries to muscle into almost every computing niche. ®

Speaking of Intel... In an all-hands email this week, CEO Gelsinger said he won't require employees to be vaccinated against the COVID-19 coronavirus, but the chip maker will offer staff $250 each if they are vaccinated by the end of the year, and $100 in food vouchers for hourly workers.
