Intel Wants To Run AI On CPUs And Says Its 5th-gen Xeons Are The Ones To Do It

Intel launched its 5th-generation Xeon Scalable processors with more cores, cache, and machine learning grunt during its AI Everywhere Event in New York Thursday.

The x86 giant hopes the chip will help it win over customers struggling to get their hands on dedicated AI accelerators, touting the processor as "the Best CPU for AI, hands down." This claim is no doubt bolstered by the fact Intel is one of the few chipmakers to have baked AI acceleration, in this case its Advanced Matrix Extensions (AMX) instructions, into their datacenter chips.

Compared to Sapphire Rapids, which we'll remind you only launched this January after more than a year of delays, Intel says its 5th-gen Xeons are as much as 1.4x faster in AI inferencing and can deliver acceptable latencies for a wide variety of admittedly smaller machine learning applications.

Before we dig into Intel's CPU-accelerated AI strategy, let's take a look at the chip itself. Despite this being a refresh year for the Xeon family, Intel has actually changed quite a bit under the hood to boost the chip's performance and efficiency compared to last-gen.

Fewer chips, more cores and cache

Emerald Rapids brings several notable improvements over its predecessor, which we largely see in the form of higher core counts and L3 cache.

The new chips can now be had with up to 64 cores. For a chip launching on the eve of 2024, that's not a whole lot of cores. AMD hit this mark in 2019 with the launch of Epyc 2, and most chipmakers, including several of the cloud providers, are now deploying chips with 72, 96, or 128 or more cores.

The good news is, unlike January's Sapphire Rapids launch, the highest core count parts aren't reserved for large four- or eight-socket platforms this time around. Previously, Intel's mainstream Xeons topped out at 56 cores. The bad news is, if you did want a large multi-socket server, you're gonna be stuck on Sapphire Rapids, at least until next year, as Intel's 5th-gen Xeons are limited to just two-socket platforms.

While you might think Intel would be using more chiplets to increase core counts, similar to how AMD boosted their Epyc 4 parts to 96 cores last year, they're not.

Intel's 5th-gen Xeons use fewer, larger compute tiles than we saw with Sapphire Rapids earlier this year.

Strip away the integrated heat spreader, and you'll find a much simpler arrangement of chiplets compared to Sapphire Rapids. Instead of meshing together four compute tiles, Emerald Rapids pares this back to two of what it calls XCC dies, each with up to 32 cores.

There are a couple of benefits to this, namely fewer dies means less data movement and therefore lower power consumption. One consequence of this approach is that these extreme core count (XCC) dies, while fewer, are physically larger. Usually larger dies mean lower yields, but the Intel 7 process tech used in both Sapphire Rapids and now Emerald Rapids is quite mature at this point.

For lower core count parts, Intel continues to employ a single monolithic die. These medium-core-count dies (MCC), as Intel calls them, can still be had with up to 32 cores. What's new this generation is the availability of an even smaller die called EE-LCC which is good for up to 20 cores.

In addition to more cores, Emerald Rapids boasts a much larger L3 cache at 320MB. That's up from 112.5MB of L3 last generation. This larger cache, combined with the simpler chiplet architecture, is largely responsible for the chip's 1.21x performance gains over last gen.

Finally, to keep the cores fed, Intel has extended support to faster DDR5 memory, up to 5,600 MT/s. While the chip is still stuck with eight memory channels — four fewer than AMD's Epyc 4 or AWS's Graviton 4 — it's now able to deliver peak bandwidth of 368 GB/s or about 5.75 GB/s per core on the top-specced part.
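As a rough cross-check of those bandwidth figures, here is a minimal sketch of the usual theoretical-peak calculation. It assumes each DDR5 channel moves 8 bytes per transfer (a 64-bit data bus), which is not stated in the article, and the function name is purely illustrative. Under that assumption the result lands a little below the quoted 368 GB/s, so the quoted number may be rounded or counted differently.

```python
def peak_bandwidth_gbs(channels: int, mts: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal units).

    channels * MT/s * bytes per transfer gives MB/s; divide by 1000 for GB/s.
    bytes_per_transfer=8 is an assumption (64-bit data bus per channel).
    """
    return channels * mts * bytes_per_transfer / 1000


# Figures from the article: eight channels of DDR5 at 5,600 MT/s, 64 cores.
emerald_rapids_gbs = peak_bandwidth_gbs(channels=8, mts=5600)
per_core_gbs = emerald_rapids_gbs / 64

print(f"Peak: {emerald_rapids_gbs:.1f} GB/s, per core: {per_core_gbs:.2f} GB/s")
```

Real-world sustained bandwidth will be lower than this ceiling, so treat it as an upper bound rather than a measurement.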

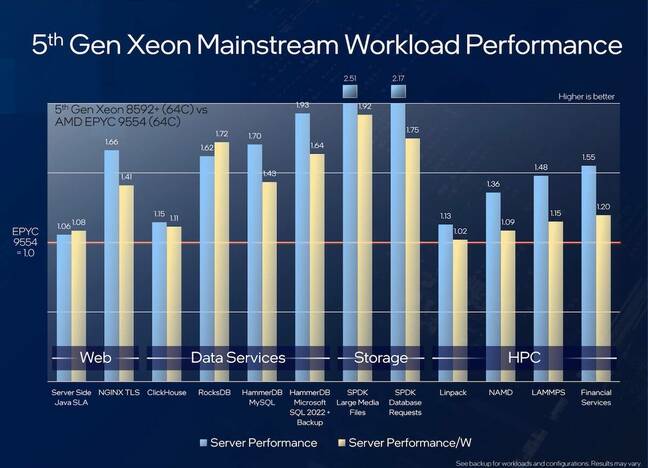

Take these with a grain of salt, but at least in a core-for-core comparison Intel says its Emerald Rapids Xeons offer up to 2.5x the performance of AMD's Epycs.

Altogether, Intel claims its 5th-gen Xeons offer a competitive advantage over AMD's Epyc 4 processors in a variety of benchmarks pitting its 64-core part against a similarly equipped Epyc 9554. As usual, take these with a grain of salt. Although the benchmarks demonstrate a core-for-core lead, they don't account for the fact AMD's Epyc 4 platform is available with between 50 and 100 percent more cores. So, while Intel's cores may in fact be faster, AMD can still pack more of them into a dual-socket server.

Can CPUs make sense for AI inferencing? Intel seems to think so

With demand for AI accelerators far outstripping supply, Intel is pushing its Emerald Rapids Xeons as an ideal platform for inferencing and has made several notable improvements to the silicon to bolster the capabilities of its AMX accelerators.

In particular, Intel has tweaked the turbo frequencies of its AVX-512 and AMX blocks to reduce the performance hit associated with activating these instructions. This, in addition to architectural improvements, translates into 42 percent higher inferencing performance in certain workloads, compared to its predecessor, the company claims.

However, with generative AI models like GPT-4, Meta's Llama 2, and Stable Diffusion all the rage, Intel is also talking up its ability to run smaller models on CPUs. For these kinds of workloads, memory bandwidth and latency are major factors. Here, the chip's faster 5,600 MT/s DDR5 helps, but it's no replacement for HBM. And while Intel actually has made CPUs with HBM on board, its Xeon Max series processors used in the Aurora and Crossroads supercomputers aren't making a return this generation.

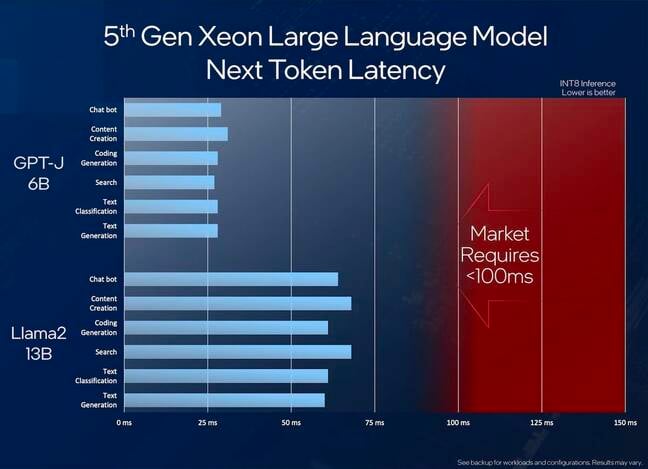

According to Intel, large language models are well within the capability of its 5th-gen Xeons up to about 20 billion parameters

Even so, Intel says it can achieve next-token latencies — this is how quickly words or phrases can be generated in response to a prompt — of around 25 milliseconds in the GPT-J model using a dual-socket Xeon platform.

But as you can see from the chart, as the number of parameters increases so does latency. Even still, Intel says it was able to achieve latencies as low as 62 milliseconds when running the Llama 2 13B model, well below the 100 milliseconds the chipmaker deems adequate.
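To put those latencies in more familiar terms, a per-token latency can be converted into an approximate generation rate. The sketch below uses only the figures quoted above; the names are illustrative, and it assumes a single stream where each token takes the quoted latency, ignoring the time to process the initial prompt.

```python
ACCEPTABLE_MS = 100  # per-token latency Intel deems adequate, per the article

# Next-token latencies quoted by Intel for a dual-socket platform.
latencies_ms = {"GPT-J": 25, "Llama 2 13B": 62}


def tokens_per_second(latency_ms: float) -> float:
    """Convert a per-token latency in milliseconds to tokens per second."""
    return 1000 / latency_ms


for model, ms in latencies_ms.items():
    verdict = "within" if ms <= ACCEPTABLE_MS else "over"
    print(f"{model}: {tokens_per_second(ms):.1f} tokens/s ({verdict} the {ACCEPTABLE_MS} ms target)")

print(f"Target floor: {tokens_per_second(ACCEPTABLE_MS):.0f} tokens/s")
```

By this measure the 100 millisecond target works out to about ten tokens per second.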

We're told that Intel has been able to achieve acceptable latencies on models up to about 20 billion parameters. Beyond this, the company has demonstrated acceptable second token latencies by distributing models, like Meta's 70 billion parameter Llama 2 model across four dual-socket nodes.

Despite this limitation, Intel insists its customers are asking it for help running inference on CPUs, which we don't doubt. The ability to run LLMs and other ML workloads at acceptable levels of performance has the potential to significantly reduce costs, especially given the astronomical price of GPUs these days.

However, for those looking to run larger models, like GPT-3 at 175 billion parameters, it seems that dedicated AI accelerators like Intel's own Habana Gaudi2 aren't going anywhere any time soon.

Speaking of which, Intel promised Gaudi3 is coming in 2024 to take on Nvidia's H100 and AMD's MI300X. Chipzilla shed no further light on that silicon, though.

The best is yet to come

Despite the improvements brought by Intel's Emerald Rapids Xeons, much of the chip's thunder has already been stolen by the vendor's next-gen datacenter parts.

Intel has spent the last few months teasing its performance and efficiency core Xeons, codenamed Granite Rapids and Sierra Forest, respectively. The parts promise to include much higher core counts, support for more, faster memory, and will be among the first to employ Intel's long-delayed 7nm (A.K.A. Intel 3) process tech.

Sierra Forest is due out in the first half of next year and will offer up to 288 efficiency cores in a single socket — 144 cores per compute tile.

Granite Rapids, meanwhile, is slated to arrive later in 2024. As we learned at Intel Innovation this summer, the chip will employ a new modular chiplet design with up to three compute tiles flanked by a pair of I/O dies on the upper and lower edges of the chip.

Intel has yet to say just how many more cores Granite Rapids will offer, but at Hot Chips this summer it did reveal we'd be getting 136 PCIe lanes and 12 memory channels with support for 8,800 MT/s MCR DIMMS. The latter will boost the chip's memory bandwidth to roughly 845 GB/s, something that should help considerably with LLM inference performance.
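One way to see why memory bandwidth matters so much for LLM inference is a back-of-the-envelope latency floor. The sketch below rests on several assumptions that are not from Intel or the article: every model weight is read from memory once per generated token (batch size of one), weights are stored at 2 bytes per parameter, each channel moves 8 bytes per transfer, and compute time, caches, and the KV cache are ignored. The function name is hypothetical.

```python
def latency_floor_ms(params_billion: float, bytes_per_param: float, bandwidth_gbs: float) -> float:
    """Lower bound on per-token latency if generation is purely memory-bound.

    Assumes all weights are streamed from DRAM once per token.
    """
    weights_gb = params_billion * bytes_per_param
    return weights_gb / bandwidth_gbs * 1000


# 12 channels at 8,800 MT/s, assuming 8 bytes per transfer per channel.
granite_rapids_gbs = 12 * 8800 * 8 / 1000

# Hypothetical example: a 13-billion-parameter model at 2 bytes per parameter.
floor_ms = latency_floor_ms(params_billion=13, bytes_per_param=2, bandwidth_gbs=granite_rapids_gbs)

print(f"Bandwidth: {granite_rapids_gbs:.1f} GB/s, latency floor: {floor_ms:.1f} ms per token")
```

Measured latencies will sit above this floor, but the relationship shows why more bandwidth translates fairly directly into faster token generation for models of a given size.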

Of course, these chips aren't launching in a vacuum. AMD is expected to roll out its 5th-gen Epyc processors, codenamed Turin, sometime next year. Elsewhere, many of the major cloud providers have announced Arm-based CPUs of their own. ®
