If Today's Tech Gets You Down, Remember Supercomputers Are Still Being Used For Scientific Progress

The US Department of Energy this week laid out how it intends to put its supercomputing might to work simulating the fundamental building blocks of the universe.

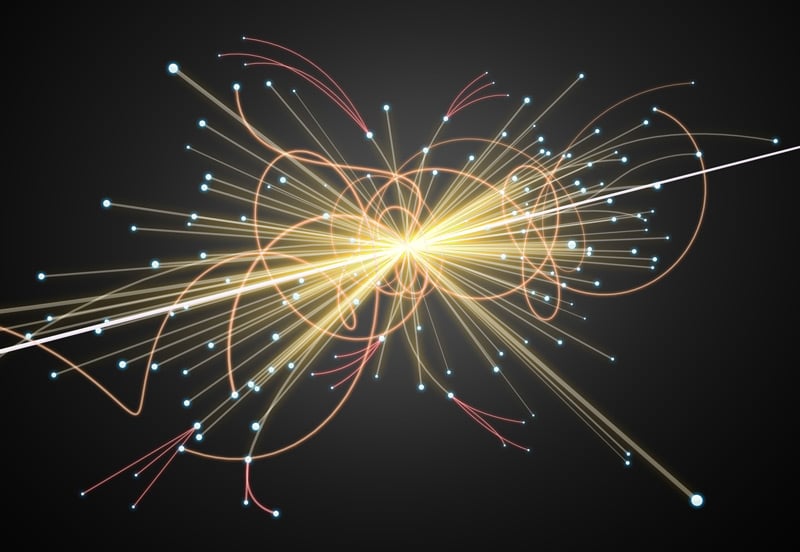

The electrons, protons and neutrons that make up atoms, of which all matter is composed, are fairly well understood. However, the elementary particles of the Standard Model (leptons, quarks and bosons), including the quarks and gluons inside protons and neutrons, remain mysterious and the subject of ongoing scientific inquiry.

A $13 million grant from the Department of Energy's Scientific Discovery through Advanced Computing (SciDAC) program aims to expand our understanding of the extraordinarily tiny particles that exist within the protons and neutrons inside atoms.

As far as scientists can tell, quarks and gluons (the particles that bind quarks together) can't be broken down any further. Along with leptons such as the electron, they are quite literally the fundamental building blocks of ordinary matter. Remember, of course, that scientists once thought the same of atoms, so who knows where this might go.

The initiative will enlist several DoE facilities – including Jefferson, Argonne, Brookhaven, Oak Ridge, Lawrence Berkeley, and Los Alamos National Labs, which will collaborate with MIT and William & Mary – to advance the supercomputing methods used to simulate quark and gluon behavior within protons.

The program seeks to answer some big questions about the nature of matter in the universe, Robert Edwards, deputy group leader for the Center for Theoretical and Computational Physics at Jefferson Lab, told The Register: “What is the origin of mass in matter? What is the origin of spin in matter?”

Today, physicists use supercomputers to generate a "snapshot" of the environment inside a proton, and use mathematics to add quarks and gluons to the mix to see how they interact. These simulations are repeated thousands of times over and then averaged to predict how these elemental particles behave in the real world.
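
Lattice QCD itself is far too large for a short example, but the "snapshot, measure, average" loop can be sketched with a much simpler stand-in: compact U(1) gauge theory on a small two-dimensional lattice, which has only gluon-like link variables and no quarks at all. The sketch below is an illustration under those assumptions, not the project's code, and every function name in it is hypothetical. Metropolis Monte Carlo generates successive field configurations, the average plaquette is measured on each one, and the measurements are averaged. This toy model was chosen because its infinite-volume answer is known exactly, I1(β)/I0(β), so the average can be checked.

```python
import math
import random


def plaquette_angle(theta, L, x, y):
    """Oriented angle around the plaquette whose lower-left corner is (x, y).

    theta[x][y][0] is the link leaving (x, y) in the x direction and
    theta[x][y][1] the link leaving it in the y direction (periodic lattice).
    """
    xp, yp = (x + 1) % L, (y + 1) % L
    return theta[x][y][0] + theta[xp][y][1] - theta[x][yp][0] - theta[x][y][1]


def local_action(theta, L, beta, x, y, mu):
    """Wilson action -beta*cos(angle) summed over the 2 plaquettes touching a link."""
    if mu == 0:  # x-link: bottom of plaquette (x, y), top of plaquette (x, y-1)
        plaqs = [(x, y), (x, (y - 1) % L)]
    else:        # y-link: left of plaquette (x, y), right of plaquette (x-1, y)
        plaqs = [(x, y), ((x - 1) % L, y)]
    return -beta * sum(math.cos(plaquette_angle(theta, L, px, py)) for px, py in plaqs)


def metropolis_sweep(theta, L, beta, step, rng):
    """One Metropolis update of every link; returns the acceptance rate."""
    accepted = 0
    for x in range(L):
        for y in range(L):
            for mu in (0, 1):
                old = theta[x][y][mu]
                s_old = local_action(theta, L, beta, x, y, mu)
                theta[x][y][mu] = old + rng.uniform(-step, step)
                d_s = local_action(theta, L, beta, x, y, mu) - s_old
                if d_s > 0 and rng.random() >= math.exp(-d_s):
                    theta[x][y][mu] = old  # rejected: restore the old link
                else:
                    accepted += 1
    return accepted / (2 * L * L)


def average_plaquette(theta, L):
    """The observable measured on each snapshot: mean cos(plaquette angle)."""
    return sum(math.cos(plaquette_angle(theta, L, x, y))
               for x in range(L) for y in range(L)) / (L * L)


def simulate(L=8, beta=1.0, step=1.0, n_therm=200, n_meas=800, seed=1):
    rng = random.Random(seed)
    theta = [[[0.0, 0.0] for _ in range(L)] for _ in range(L)]  # cold start
    for _ in range(n_therm):  # discard early, unequilibrated snapshots
        metropolis_sweep(theta, L, beta, step, rng)
    samples = []
    for _ in range(n_meas):   # one new snapshot per sweep, measured and kept
        metropolis_sweep(theta, L, beta, step, rng)
        samples.append(average_plaquette(theta, L))
    mean = sum(samples) / len(samples)
    var = sum((s - mean) ** 2 for s in samples) / (len(samples) - 1)
    # naive error bar: ignores autocorrelation between successive snapshots
    return mean, math.sqrt(var / len(samples))


def bessel_i(n, z, terms=30):
    """Modified Bessel function I_n(z) from its power series."""
    return sum((z / 2) ** (2 * k + n) / (math.factorial(k) * math.factorial(k + n))
               for k in range(terms))


if __name__ == "__main__":
    mean, err = simulate()
    exact = bessel_i(1, 1.0) / bessel_i(0, 1.0)  # infinite-volume result at beta = 1
    print(f"<cos P> = {mean:.4f} +/- {err:.4f}  (exact: {exact:.4f})")
```

Each sweep produces a new snapshot that differs only slightly from the previous one. That is why real simulations generate many of them, and why a careful error analysis has to account for correlations between successive samples.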

This project, led by the Thomas Jefferson National Accelerator Facility, encompasses four phases which aim to streamline and accelerate these simulations.

The first two phases will involve optimizing the software used to model quantum chromodynamics (QCD), the theory of the strong interaction that governs quarks and gluons, so that it breaks the calculations into smaller chunks and takes better advantage of the even greater parallelism available on next-gen supercomputers.

One of the challenges Edwards and his team are working through now is how to take advantage of the growing floating-point capabilities of GPUs without running into connectivity bottlenecks when scaling them up.

“A good chunk of our efforts have been trying to find communication-avoiding algorithms and to lower the amount of communication that has to come off the nodes,” he said.
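
Why splitting the work more finely strains the interconnect can be seen with some back-of-the-envelope arithmetic. The sketch below assumes, for illustration only, a lattice of 64 sites in each of four dimensions, split evenly across GPUs in every dimension, and a nearest-neighbour stencil. Under those assumptions each GPU must receive a boundary "halo" of sites from its neighbours on every iteration, and for a local block of extent l the halo-to-volume ratio is 2·4·l³/l⁴ = 8/l. The function name is hypothetical.

```python
def halo_to_volume(global_extent: int, splits_per_dim: int, dims: int = 4):
    """Return (local extent, halo sites / local sites) for one GPU's block.

    Assumes a hypercubic global lattice split evenly along every dimension
    and a nearest-neighbour stencil, so each of the block's 2*dims faces
    (local**(dims-1) sites each) must be received from a neighbouring GPU.
    """
    if global_extent % splits_per_dim != 0:
        raise ValueError("lattice must divide evenly across GPUs")
    local = global_extent // splits_per_dim
    volume = local ** dims
    halo = 2 * dims * local ** (dims - 1)
    return local, halo / volume


# A 64^4 lattice spread over 16, 256 and 4096 GPUs
for splits in (2, 4, 8):
    local, ratio = halo_to_volume(64, splits)
    print(f"{splits ** 4:5d} GPUs: {local}^4 sites each, "
          f"{ratio:.2f} halo sites per local site")
```

Halving the local extent doubles the data exchanged per unit of computation. That growing pressure on the network is what communication-avoiding algorithms aim to relieve.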

The team is also looking at applying machine learning to parameterize the probability distributions at the heart of these simulations. According to Edwards, this has the potential to dramatically speed up simulations and to eliminate many of the bottlenecks around node-to-node communications.

“If we could scale it, this is like the Holy Grail for us,” he said.
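
The article does not say which machine-learning technique the team is using. One approach published by lattice physicists, known as flow-based sampling, trains a model distribution q to approximate the target distribution p by minimizing the reverse KL divergence, then uses q to propose entirely new configurations. A Metropolis accept/reject step keeps the results exact even when q is imperfect, and independent proposals avoid long chains of small, correlated local updates. The toy sketch below rests on heavy simplifying assumptions: a one-dimensional "action" instead of a field theory, a Gaussian with one width parameter in place of a neural network, and a grid search in place of gradient-based training. All names are hypothetical.

```python
import math
import random


def log_p(x):
    """Unnormalised log-density of a toy target, exp(-S) with S = x^2/2 + x^4/4."""
    return -(0.5 * x * x + 0.25 * x ** 4)


def log_q(x, sigma):
    """Normalised log-density of the trainable model q: a zero-mean Gaussian."""
    return -0.5 * (x / sigma) ** 2 - math.log(sigma * math.sqrt(2 * math.pi))


def reverse_kl(sigma, rng, n=4000):
    """Monte Carlo estimate of KL(q || p), up to p's unknown normalisation."""
    total = 0.0
    for _ in range(n):
        x = rng.gauss(0.0, sigma)  # samples come from the model itself
        total += log_q(x, sigma) - log_p(x)
    return total / n


def train(rng):
    """Stand-in for training: grid search over the model's single parameter."""
    grid = [0.3 + 0.05 * i for i in range(30)]
    return min(grid, key=lambda s: reverse_kl(s, rng))


def independence_metropolis(sigma, n_samples, rng):
    """Draw independent proposals from q; accept/reject keeps sampling exact."""
    x = rng.gauss(0.0, sigma)
    samples, accepted = [], 0
    for _ in range(n_samples):
        y = rng.gauss(0.0, sigma)
        # log of [p(y) q(x)] / [p(x) q(y)]
        log_ratio = (log_p(y) - log_q(y, sigma)) - (log_p(x) - log_q(x, sigma))
        if log_ratio >= 0 or rng.random() < math.exp(log_ratio):
            x = y
            accepted += 1
        samples.append(x)
    return samples, accepted / n_samples


if __name__ == "__main__":
    rng = random.Random(0)
    sigma = train(rng)
    samples, acc = independence_metropolis(sigma, 20000, rng)
    x2 = sum(s * s for s in samples) / len(samples)
    print(f"sigma = {sigma:.2f}, acceptance = {acc:.2f}, <x^2> = {x2:.3f}")
```

The better q matches p, the higher the acceptance rate and the less correlated the samples. Scaling a model this simple up to the enormous configuration spaces of lattice QCD is the hard part.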

In addition to using existing models, the third phase of the project will involve developing new methods for modeling the interaction of quarks and gluons within a computer-generated universe. The final phase will take the information gathered by these efforts and use it to begin scaling up systems for deployment on next-gen supercomputers.

According to Edwards, the findings from this research also have practical applications for experimental work at facilities such as Jefferson Lab's Continuous Electron Beam Accelerator Facility and Brookhaven Lab's Relativistic Heavy Ion Collider, two of the instruments used to study quarks and gluons.

"Many of the problems that we are trying to address now, such as code infrastructures and methodology, will impact the [Electron-Ion Collider, planned for Brookhaven]," he explained.

The DoE's interest in optimizing its models to take advantage of larger and more powerful supercomputers comes as the agency receives a $1.5 billion check from the Biden administration to upgrade its computational capabilities.