Google Unveils TPU v5p Pods To Accelerate AI Training

Google has revealed a performance-optimized version of its Tensor Processing Unit (TPU), called the v5p, designed to cut the time it takes to train large language models.

The chip builds on the TPU v5e announced earlier this year. But while that chip was billed as Google's most "cost-efficient" AI accelerator, the TPU v5p is designed to push more FLOPS and scale to even larger clusters.

Google has relied on its custom TPUs, which are essentially just big matrix math accelerators, for several years now to power the growing number of machine learning features baked into its web products like Gmail, Google Maps, and YouTube. More recently, however, Google has started opening its TPUs up to the public to run AI training and inference jobs.

According to Google, the TPU v5p is its most powerful accelerator yet, capable of pushing 459 teraFLOPS of bfloat16 performance or 918 teraOPS of Int8. This is backed by 95GB of high bandwidth memory with 2.76 TB/s of bandwidth.

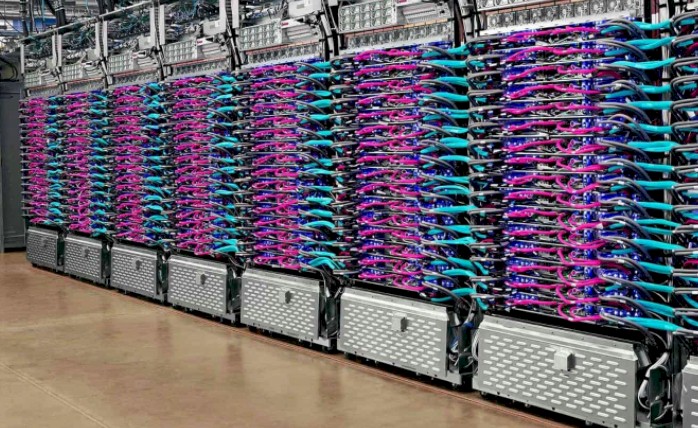

As many as 8,960 v5p accelerators can be coupled together in a single pod using Google's 600 GB/s inter-chip interconnect to train models faster or at greater precision. For reference, that's 35x larger than was possible with the TPU v5e and more than twice as large as was possible on TPU v4.
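To put the pod-scale figures in perspective, here is a rough back-of-the-envelope sketch that multiplies the per-chip numbers quoted above by the pod size. It assumes perfectly linear scaling of peak figures, which real training jobs will not achieve, and the function and constant names are made up for illustration.

```python
# Per-chip figures quoted in the article (peak, vendor-stated).
CHIPS_PER_POD = 8960
BF16_TFLOPS_PER_CHIP = 459
INT8_TOPS_PER_CHIP = 918
HBM_GB_PER_CHIP = 95
V5E_SCALE_FACTOR = 35  # the article's "35x larger" than a v5e pod


def pod_totals(chips: int = CHIPS_PER_POD) -> dict:
    """Naive aggregate: per-chip peak times chip count (no scaling losses)."""
    return {
        "bf16_exaflops": chips * BF16_TFLOPS_PER_CHIP / 1e6,  # tera -> exa
        "int8_exaops": chips * INT8_TOPS_PER_CHIP / 1e6,  # tera -> exa
        "hbm_terabytes": chips * HBM_GB_PER_CHIP / 1e3,  # GB -> TB
        "implied_v5e_pod_chips": chips // V5E_SCALE_FACTOR,
    }


if __name__ == "__main__":
    for name, value in pod_totals().items():
        print(f"{name}: {value:,.2f}")
```

On paper, a full pod works out to roughly 4.1 exaFLOPS of peak bfloat16 compute and about 851 TB of high bandwidth memory, and the 35x figure implies a 256-chip ceiling for v5e pods.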

Google claims the new accelerator can train popular large language models like OpenAI's 175-billion-parameter GPT-3 1.9x faster than its older TPU v4 parts using BF16, and up to 2.8x faster if you're willing to drop floating point for 8-bit integer calculations.

This greater performance and scalability does come at a cost. Each TPU v5p accelerator will run you $4.20 an hour, compared to $3.22 an hour for TPU v4 or $1.20 an hour for TPU v5e. So if you're not in a rush to train or refine your model, Google's efficiency-focused v5e chips still offer better bang for your buck.
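For a rough sense of how the hourly prices and Google's claimed speedups interact, the sketch below compares what a fixed training job might cost on v5p relative to v4. It assumes the same number of chips on both sides, that the claimed 1.9x and 2.8x speedups hold for the workload, and that the quoted hourly prices apply. The article gives no speedup figure for v5e, so that chip is left out of the comparison. All names are hypothetical.

```python
# Hourly prices per chip and speedup claims, as quoted in the article.
PRICE_PER_CHIP_HOUR = {"v5p": 4.20, "v4": 3.22, "v5e": 1.20}
CLAIMED_SPEEDUP_VS_V4 = {"bf16": 1.9, "int8": 2.8}  # v5p versus v4


def relative_job_cost(speedup: float, new_price: float, old_price: float) -> float:
    """Cost of a fixed job on the new chip as a fraction of the old chip's cost.

    Assumes equal chip counts and that the job finishes `speedup` times sooner.
    """
    return (new_price / old_price) / speedup


def pod_cost_per_hour(chips: int, price: float) -> float:
    """Hourly bill for `chips` accelerators at a flat per-chip price."""
    return chips * price


if __name__ == "__main__":
    for precision, speedup in CLAIMED_SPEEDUP_VS_V4.items():
        ratio = relative_job_cost(
            speedup, PRICE_PER_CHIP_HOUR["v5p"], PRICE_PER_CHIP_HOUR["v4"]
        )
        print(f"{precision}: v5p job cost is about {ratio:.2f}x the v4 cost")
    full_pod = pod_cost_per_hour(8960, PRICE_PER_CHIP_HOUR["v5p"])
    print(f"full v5p pod: ${full_pod:,.0f} per hour")
```

Under those assumptions, the same job would cost roughly 0.69x as much on v5p as on v4 in BF16 and about 0.47x in Int8, despite the higher hourly rate. A full 8,960-chip pod at the quoted price would come to around $37,632 an hour.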

Along with the new hardware, Google has introduced the concept of an "AI hypercomputer." The cloud provider describes it as a supercomputing architecture that employs a close-knit system of hardware, software, ML frameworks, and consumption models.

"Traditional methods often tackle demanding AI workloads through piecemeal, component-level enhancements, which can lead to inefficiencies and bottlenecks," Mark Lohmeyer, VP of Google's compute and ML infrastructure division, explained in a blog post Wednesday. "In contrast, AI hypercomputer employs system-level codesign to boost efficiency and productivity across AI training, tuning, and serving."

In other words, a hypercomputer is a system in which any variable, hardware or software, that could lead to performance inefficiencies is controlled and optimized.

Google's new hardware and AI supercomputing architecture debuted alongside Gemini, a multi-modal large language model capable of handling text, images, video, audio, and code.
